TL;DR

- Full Unstructured functionality available programmatically: All functionality available in the Unstructured UI is now available programmatically via Unstructured API

- Two Unstructured API endpoints:

- Unstructured Partition Endpoint: supersedes our previous Serverless API and now includes VLM partitioning capabilities.

- 🆕 Unstructured Workflow Endpoint: an entirely new endpoint, enabling users to programmatically connect, partition, chunk, generate structured data, embed, and write data to destinations on a set schedule.

- MCP-ready: Unstructured API enables building MCP servers for natural language interaction for GenAI ETL with Unstructured.

- Get Unstructured access and your API key here

- Read the Docs here:

- Working with Partition Endpoint directly

- Working with Partition Endpoint via Python SDK

- Workflow Endpoint

The Challenge of Unstructured Data in GenAI

When putting Generative AI (GenAI) into production, one of the toughest challenges is the data layer. 80% of enterprise data lives in unstructured and difficult-to-access formats and stores. Traditional extract, transform, and load (ETL) solutions aren't designed to render raw unstructured data into large language model (LLM) compatible formats, forcing engineers to manually batch process their data. This can lead to a rat’s nest of point solutions, custom scripts, and manual processes.

Unstructured: The Complete ETL+ Solution

Unstructured solves this problem with ETL+. We start with ETL for GenAI: continuously harvesting newly generated unstructured data from systems of record, transforming it into LLM-ready formats using our optimized, pre-built pipelines, and writing it to downstream locations such as vector or graph databases. Then we add the +: all the features enterprise users need to eliminate ETL headaches and stay focused on applications that drive their business forward.

Our initial solution released in December focused on the Unstructured UI with limited API functionality. Now, we're expanding access to our full platform capabilities through a comprehensive API that enables Model Context Protocol (MCP) integrations, unlocking direct natural language interaction between LLMs and your unstructured data and ETL pipelines.

With Unstructured, you can deploy ingestion and preprocessing pipelines in seconds. Our system automatically transforms complex, unstructured data into clean, structured data for GenAI applications while optimizing the workflow with the latest developments in ETL. With horizontal scaling and 300x concurrency per organization, we offer unmatched processing speed. New image-to-text, text-to-text, and text-to-embedding models are added weekly, ensuring you can tailor pipelines to meet your speed, cost, and performance requirements.

We accomplish all this while meeting enterprise security and compliance standards across your entire pipeline, with cutting-edge features like contextual chunking, custom prompting for metadata enrichment, and VLM prompts optimized for each new model we integrate. Effortless ETL. Automatically. Continuously. Securely.

Unstructured API: Full Feature Parity At Your Fingertips

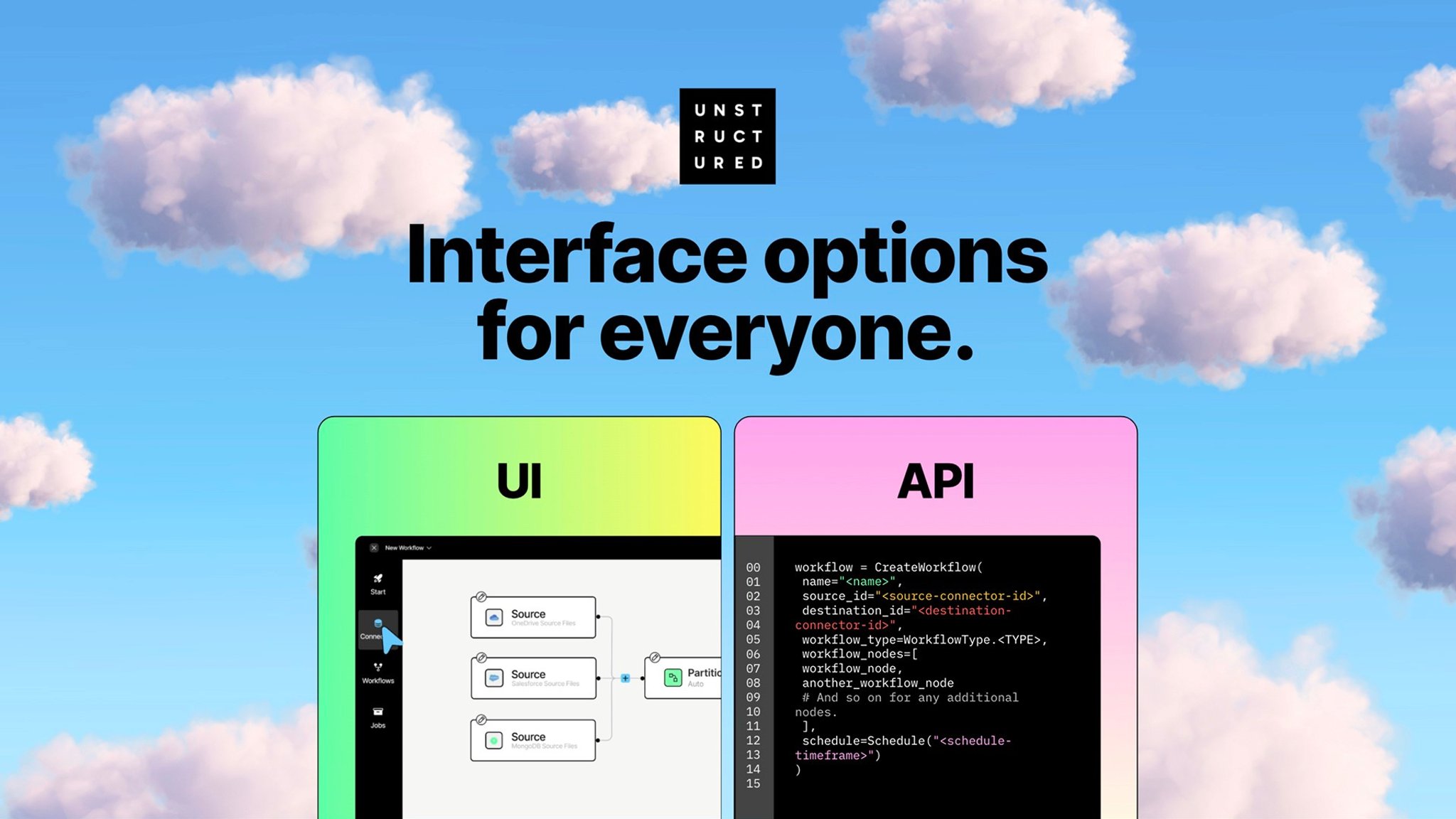

We are excited to announce the full release of Unstructured API, which now offers complete feature parity with the Unstructured UI capabilities. Anything you can do in Unstructured UI, you can now do programmatically. This means you can:

- Connect to where your data lives and where you want it to land

- Set up complete workflows with all the partition, chunking, enrichment, and embedding options

- Create quick prototypes by partitioning local files via VLM, and customize your workflow from there

There are two ways to use Unstructured API: via the "Unstructured Workflow Endpoint" or "Unstructured Partition Endpoint." All of this is accessible through our brand new Python SDK.

1️⃣ Unstructured Workflow Endpoint

This endpoint allows you to create end-to-end production workflows by configuring nodes (such as sources, destinations, chunking, embedding, custom prompts, etc.) programmatically. You can do all the same things exactly as you would do with the Unstructured UI, but with code.
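To make the idea of "configuring nodes programmatically" concrete, here is a minimal sketch of a workflow expressed as data. It is not the Unstructured SDK: the `Node` and `Workflow` classes, every field name, and the placeholder IDs are hypothetical and only illustrate how a source, a destination, and an ordered list of processing nodes could be described in code and serialized to a request body.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Node:
    """One processing step in a workflow. All field names are illustrative."""
    name: str
    type: str  # e.g. "partition", "chunk", "embed"
    settings: dict = field(default_factory=dict)


@dataclass
class Workflow:
    """A hypothetical workflow definition: connectors plus ordered nodes."""
    name: str
    source_id: str        # placeholder ID of an existing source connector
    destination_id: str   # placeholder ID of an existing destination connector
    nodes: list = field(default_factory=list)
    schedule: str = "daily"  # assumed schedule label, not a documented value

    def to_payload(self) -> str:
        """Serialize the whole definition, nested nodes included, to JSON."""
        return json.dumps(asdict(self), indent=2)


workflow = Workflow(
    name="reports-to-vector-db",
    source_id="<source-connector-id>",
    destination_id="<destination-connector-id>",
    nodes=[
        Node("Partitioner", "partition", {"strategy": "vlm"}),
        Node("Chunker", "chunk", {"max_characters": 1024}),
        Node("Embedder", "embed", {"model": "<embedding-model-name>"}),
    ],
)

payload = workflow.to_payload()
print(payload)
```

The node order in the list mirrors the order in which the data flows: partition first, then chunk, then embed. For the real request schema and node settings, refer to the Workflow Endpoint documentation.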

2️⃣ Unstructured Partition Endpoint

This endpoint allows you to send a local file to one of our partition strategies: Auto, VLM, Fast, or Hi_Res. It's perfect for rapid prototyping with Unstructured's partitioning strategies, and it extends our previous API functionality with VLM partitioning.
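As a rough illustration of what "sending a local file with a chosen strategy" involves, the sketch below builds a multipart HTTP request using only the Python standard library and stops short of sending it. The endpoint URL, the `unstructured-api-key` header name, the `files` and `strategy` form-field names, and the lowercase strategy value are assumptions here, so check them against the Partition Endpoint documentation before relying on them.

```python
import uuid
import urllib.request

# Assumed endpoint URL; confirm the value shown for your account in the docs.
PARTITION_URL = "https://api.unstructuredapp.io/general/v0/general"


def build_partition_request(file_name, file_bytes, api_key, strategy="auto"):
    """Build (but do not send) a multipart/form-data partition request."""
    boundary = uuid.uuid4().hex
    # First part: the strategy form field. Second part: the file itself.
    head = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="strategy"\r\n\r\n'
        f"{strategy}\r\n"
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="files"; filename="{file_name}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        PARTITION_URL,
        data=head + file_bytes + tail,
        method="POST",
        headers={
            "unstructured-api-key": api_key,  # assumed header name
            "Content-Type": f"multipart/form-data; boundary={boundary}",
            "Accept": "application/json",
        },
    )


# Placeholder bytes stand in for a real PDF read from disk.
request = build_partition_request(
    "report.pdf", b"%PDF-1.7 ...", "<your-api-key>", strategy="vlm"
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

In practice the Python SDK wraps this plumbing for you; the sketch is only meant to show that a partition call is a single file upload plus a strategy choice.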

Unstructured API: The Foundation for MCP Servers

One of the most exciting capabilities of Unstructured API is that it enables developers to build Model Context Protocol (MCP) servers for seamless LLM integration with your unstructured data.

MCP (Model Context Protocol) is an open protocol that standardizes how applications provide context to LLMs. From MCP official docs: think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

The MCP ecosystem primarily consists of:

- MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data or tools through MCP

- MCP Clients: Protocol clients that maintain 1:1 connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol
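The roles above can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and a client invokes a server capability with a `tools/call` request. The toy handler below is a simplified, in-process illustration rather than a real MCP server: it skips the initialization handshake, tool listing, and transport that the official MCP SDKs handle, and the `get_job_status` tool and its stubbed reply are hypothetical.

```python
import json

# Registry of tools this toy "server" exposes, keyed by tool name.
TOOLS = {}


def tool(fn):
    """Register a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_job_status(job_id: str) -> str:
    # Hypothetical tool: a real server would query Unstructured API here.
    return f"Job {job_id}: status lookup not implemented in this sketch"


def handle_message(raw: str) -> str:
    """Handle one JSON-RPC 2.0 message and return the JSON-encoded reply."""
    msg = json.loads(raw)
    if msg.get("method") == "tools/call":
        params = msg["params"]
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})
    error = {"code": -32601, "message": "Method not found"}
    return json.dumps({"jsonrpc": "2.0", "id": msg.get("id"), "error": error})


# What a client would send on behalf of the host application.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_job_status", "arguments": {"job_id": "abc123"}},
}
response = json.loads(handle_message(json.dumps(request)))
print(response["result"]["content"][0]["text"])
```

The host never needs to know how the tool is implemented; it only sees the tool name, its arguments, and the text content that comes back.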

Building MCP Servers Over Unstructured API

Over the past couple of days, our team has been building MCP servers to showcase the potential of integrating Unstructured API with LLMs:

- Enhanced Document Processing for Claude Desktop This MCP server dramatically expands Claude Desktop's document processing capabilities. While Claude Desktop has native support for some file types, this server removes those limitations by leveraging Unstructured's powerful document processing capabilities. Now, Claude Desktop can extract and use content from 60+ unstructured document types, regardless of complexity or format. Imagine being able to upload financial reports with complex tables, legal contracts with intricate layouts, or emails—and having Claude immediately understand and work with that content. GitHub repo.

https://x.com/mariaKhalusova/status/1899531478637465810

- Job and Workflow Status Monitor This MCP server enables LLMs to check the status of jobs and workflows on Unstructured in real-time through natural language queries. Users can simply ask, "How is my document processing job progressing?" or "What's the status of my workflow that started this morning?" and get immediate, accurate responses. This integration creates a seamless experience where complex ETL processes can be monitored and managed through simple conversations.

https://x.com/mariaKhalusova/status/1899480432762142821

- End-to-End RAG Pipeline Integration Perhaps most impressive is our MCP server that connects Unstructured API with Astra DB to create a complete RAG (Retrieval-Augmented Generation) pipeline. This server transforms documents, chunks them appropriately, and ingests them into a vector database, all through a simple chat interface. Users can simply ask, "Please process this annual report and make it available for my RAG application," and the server handles the entire process end-to-end.

https://x.com/UnstructuredIO/status/1899545583792128384

These examples demonstrate the immense potential of combining Unstructured API with MCP. By building MCP servers on top of Unstructured API, you can create powerful, user-friendly interfaces that make complex document processing and ETL workflows accessible through natural language. We would love to see what MCP servers you build on top of Unstructured API. Whether you're looking to enhance document processing capabilities in your applications, create custom workflows triggered by natural language, or build intelligent agents that can work with unstructured data, our API provides the foundation you need!

On top of that, we're working on releasing an official Unstructured MCP server that will allow you and your LLM agents to use all of Unstructured's functionality over MCP. Stay tuned!

Getting Started

For Serverless API Users

You have several new features available to you, while nothing has changed for your current implementation of Serverless API! In addition to fast and hi-res, we have two new strategies available for you:

1️⃣ VLM - our most performant partition strategy, available for 14 models today, with more added every week. We have optimized the prompts for each model we add to give you the best partitioning available.

2️⃣ Auto - This strategy automatically routes each document, page by page, to the optimal strategy for that page. VLMs are the most performant partition strategy, but they are slower and more expensive, and they are overkill for simpler, text-only pages of an otherwise complex document. The Auto strategy sends only the pages that need it to a VLM, which lowers cost and increases speed while maintaining high-fidelity partitioning.
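The page-by-page idea behind Auto can be illustrated with a toy router. The rules below are invented for illustration only; this post does not describe the criteria Unstructured actually uses, and the `Page` fields and `choose_strategy` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical per-page features a router might look at."""
    number: int
    has_images: bool
    has_tables: bool
    has_text_layer: bool


def choose_strategy(page: Page) -> str:
    """Pick the cheapest strategy that should still handle the page well."""
    if page.has_images or page.has_tables:
        return "vlm"      # complex layout: use the most capable strategy
    if not page.has_text_layer:
        return "hi_res"   # scanned page with no extractable text
    return "fast"         # plain text page: cheapest and quickest


pages = [
    Page(1, has_images=False, has_tables=False, has_text_layer=True),
    Page(2, has_images=True, has_tables=True, has_text_layer=True),
    Page(3, has_images=False, has_tables=False, has_text_layer=False),
]
routes = {page.number: choose_strategy(page) for page in pages}
print(routes)
```

The point of the sketch is the shape of the decision: each page is judged on its own, so one complex page does not force the whole document onto the most expensive path.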

If you want to try the additional features available in the API via the Workflow Endpoint, read on!

For New API Users

Unstructured Workflow Endpoint

This endpoint is for users to set up an end-to-end production workflow. Check out the videos below on how to set up your connectors and run your workflows via Python SDK:

Part 1: Set Up Your Connectors

1 - Set up your credentials

2 - Create & validate a source connector

3 - Create & validate a destination connector

Part 2: Set Up and Run Your Workflow

4 - Set up a custom workflow, setting your partition, chunker, and embedder nodes

5 - Run your custom workflow

Or try these steps yourself with the notebook we demonstrated in the video! You can also read the documentation for more details that we haven’t covered in this blog.

Unstructured Partition Endpoint

This endpoint lets you quickly prototype with our partition strategies before building an end-to-end production workflow with the Unstructured Workflow Endpoint. Check out this video on how to partition a local file with a VLM:

Unstructured API: How to use a VLM to partition a local file

- Set up your credentials

- Use an Anthropic VLM to partition a PDF file: a financial report with images and tables

- Review the results of the partition

- Use an OpenAI VLM to partition the same PDF file

- Review the results of the partition

- Use an OpenAI VLM to partition an image file: a financial chart

Or try these steps yourself with the notebook we demonstrated in the video! You can also read the documentation for more details that we haven’t covered in this blog.
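When reviewing the results of a partition, it helps to summarize them before reading element by element. Partition output is a list of elements, each with a `type`, `text`, and `metadata`. The sketch below uses a small hand-written sample in that shape (the sample values are made up, not real output) and shows one way to count element types and pull out a single page.

```python
from collections import Counter

# Hand-written sample in the shape of partition output; values are made up.
elements = [
    {"type": "Title", "text": "Annual Report", "metadata": {"page_number": 1}},
    {"type": "NarrativeText", "text": "Revenue grew ...", "metadata": {"page_number": 1}},
    {"type": "Table", "text": "Q1 Q2 Q3 Q4 ...", "metadata": {"page_number": 2}},
    {"type": "Image", "text": "Bar chart of ...", "metadata": {"page_number": 2}},
]


def summarize(items):
    """Count how many elements of each type were extracted."""
    return Counter(item["type"] for item in items)


def on_page(items, page_number):
    """Return only the elements whose metadata places them on the given page."""
    return [
        item for item in items
        if item.get("metadata", {}).get("page_number") == page_number
    ]


summary = summarize(elements)
page_two = on_page(elements, 2)
print(dict(summary))
print([item["type"] for item in page_two])
```

Running the same summary over the output of two different VLMs is a quick way to spot where they disagree, for example if one returns a `Table` where the other returns plain text.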

Unstructured Python SDK

We developed an easy-to-use SDK for integrating Unstructured throughout your software. See the SDK's PyPI page or documentation for more detail than the workflow demo above covers.

How it works under the hood

Unstructured API offers the same features as Unstructured UI: transformation and enrichment, connection and orchestration, and enterprise features. You can read more about these features in our Platform announcement blog, or read about our specific enterprise features in our blog about production-ready ETL features.

How to get access to Unstructured API

To start transforming your data with Unstructured, contact us to get access—or log in if you're already a user. From there, you can either use the UI or follow these steps to access your API key to use Unstructured programmatically.

What’s next?

We're excited to share a preview of our next feature coming in March: Playground. This feature will allow users to upload a file and see how their data changes with each node. We'll update the Unstructured API with the Playground features to allow users to locally test a file and build an end-to-end workflow programmatically.

You can also look forward to the official Unstructured MCP server implementation to integrate with your LLM agents.

FAQ

Q: How is Unstructured API different from the previous API?
A: Unstructured API offers two endpoints: Workflow, which can build a full workflow, and Partition, which includes the prior functionality and adds advanced VLM capabilities.

Q: What happens to the current API?
A: You can keep everything exactly the same and everything will work as is. You can now also update your partition strategy to VLM if that better suits your needs, or leverage the Auto strategy to let us select the right strategy for your documents, page by page.

Q: What kinds of documents can I process with the API?
A: Check out the full list of supported document types in our documentation.

Q: How do I know my data is secure?
A: We're SOC 2 Type 2 certified, HIPAA compliant, and GDPR-ready. We also offer in-VPC deployment for complete data sovereignty.

Q: What if I need help getting started?
A: No problem! We are here to help. Drop us a note here and we'll get in touch.