
Ever found yourself wrestling with complex enterprise data scattered across your organization, trying to get it into Snowflake to power a RAG system? You're not alone.

Today, we’re excited to announce that the Unstructured Platform now integrates directly with Snowflake, making it easier than ever to unlock your enterprise data for RAG applications.

This integration enables bidirectional data flow, allowing you to:

- Pull documents from any enterprise data platform, process them with Unstructured's enterprise-grade document transformation workflows, and write the structured results to Snowflake, or

- Ingest data from Snowflake tables and preprocess it uniformly alongside data from other sources, enabling a RAG application over a mix of structured and unstructured data.

Why Snowflake?

Snowflake is a cloud-based data platform that provides comprehensive tools for data warehousing, engineering, sharing, science, and analytics. Its architecture separates storage and compute, enabling scalable and concurrent data processing. Data teams particularly value Snowflake for its near-zero management overhead, automatic scaling, robust security features, and powerful data sharing capabilities.

How Does the Integration Work?

The Snowflake integration in Unstructured Platform consists of a source connector and a destination connector. When Snowflake is used as a source, Unstructured Platform retrieves document content and metadata from designated Snowflake tables. The source connector fetches rows in batches of an adjustable size, supports selective column retrieval, and tracks a unique ID per record to support document versioning. As a destination, Snowflake stores the results of unstructured data processing in a standardized schema.
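To make the source-side behavior concrete, here is a minimal sketch of selective column retrieval and batched reads. It is an illustration under stated assumptions, not the connector's actual implementation: `build_select` and `batched` are hypothetical helper names, and the rows are plain Python dicts rather than results from a live Snowflake connection.

```python
from typing import Iterable, Iterator, List, Optional, Sequence


def build_select(table: str, id_column: str,
                 columns: Optional[Sequence[str]] = None) -> str:
    """Build a SELECT statement; with no column list, all columns are fetched.

    Assumption: identifiers come from trusted configuration, so they are
    interpolated directly. Do not pass untrusted input here.
    """
    if not columns:
        return f"SELECT * FROM {table}"
    wanted = list(columns)
    # The ID column is always needed so each record can be tracked.
    if id_column not in wanted:
        wanted.insert(0, id_column)
    return f"SELECT {', '.join(wanted)} FROM {table}"


def batched(rows: Iterable[dict], batch_size: int = 50) -> Iterator[List[dict]]:
    """Yield lists of at most batch_size rows, preserving order."""
    if batch_size < 1:
        raise ValueError("batch_size must be a positive integer")
    batch: List[dict] = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final, possibly smaller, batch
        yield batch


# Usage with stand-in data (120 fake rows instead of a real table).
query = build_select("DOCS", "ID", ["TITLE", "BODY"])
fake_rows = ({"ID": i, "TITLE": f"doc {i}", "BODY": "..."} for i in range(120))
batch_sizes = [len(b) for b in batched(fake_rows, batch_size=50)]
```

With a batch size of 50, the 120 stand-in rows arrive as two full batches and one partial batch.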

Setting Up the Integration

We have integrated multiple videos into our documentation to help you learn how to obtain necessary credentials, configure roles, and find connection details. Check them out to get started easily:

- Snowflake source connector docs

- Snowflake destination connector docs

Source Connector Configuration

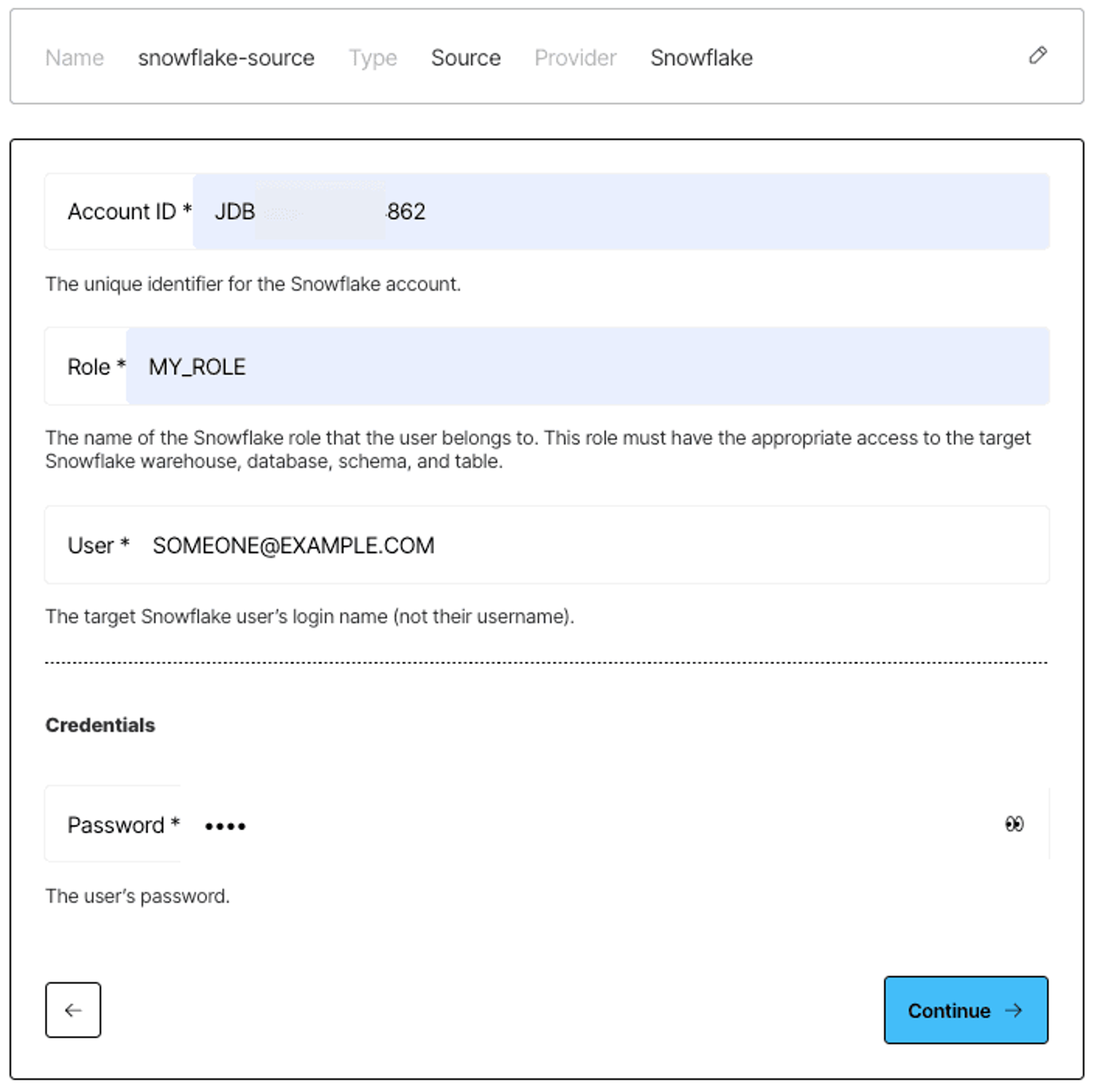

Getting your Snowflake source connector up and running in the Unstructured Platform is a straightforward process once you’ve obtained all of the necessary credentials. Just follow these steps:

1) Head over to the Connectors section in the Platform UI, click Sources, then New.

2) Select Snowflake as the provider and give it a name.

3) Configure the required fields:

- Account ID: Your Snowflake account identifier

- Role: The name of the Snowflake role that the user belongs to. This role must have the appropriate access to the target Snowflake warehouse, database, schema, and table.

- User/Password: The target Snowflake user’s login name (not their username) and their password.

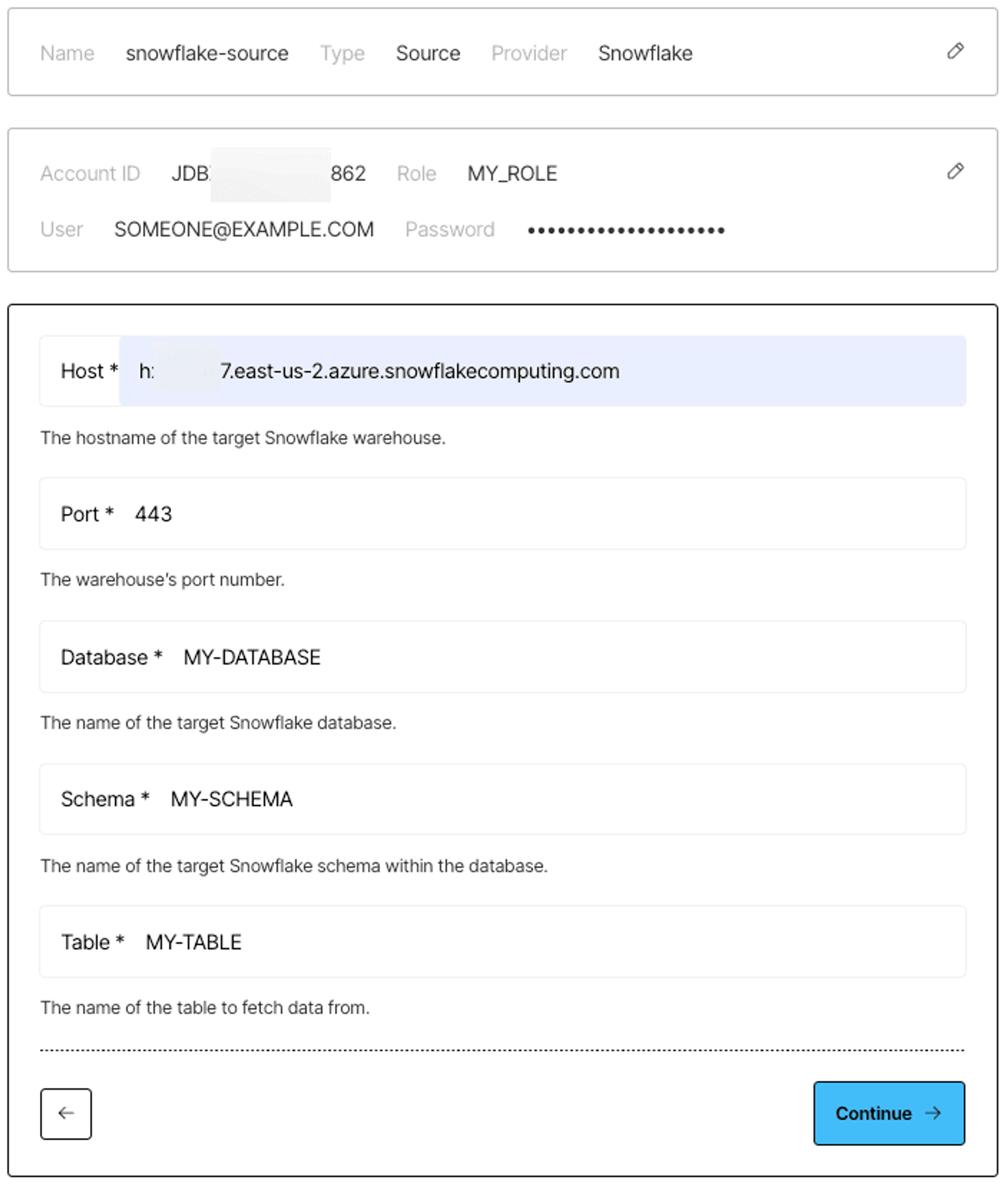

- Host/Port: The target Snowflake warehouse’s hostname and port.

- Database: The target database’s name.

- Schema: The name of the target Snowflake schema within the database.

- Table: The name of the target Snowflake table within the database’s schema.

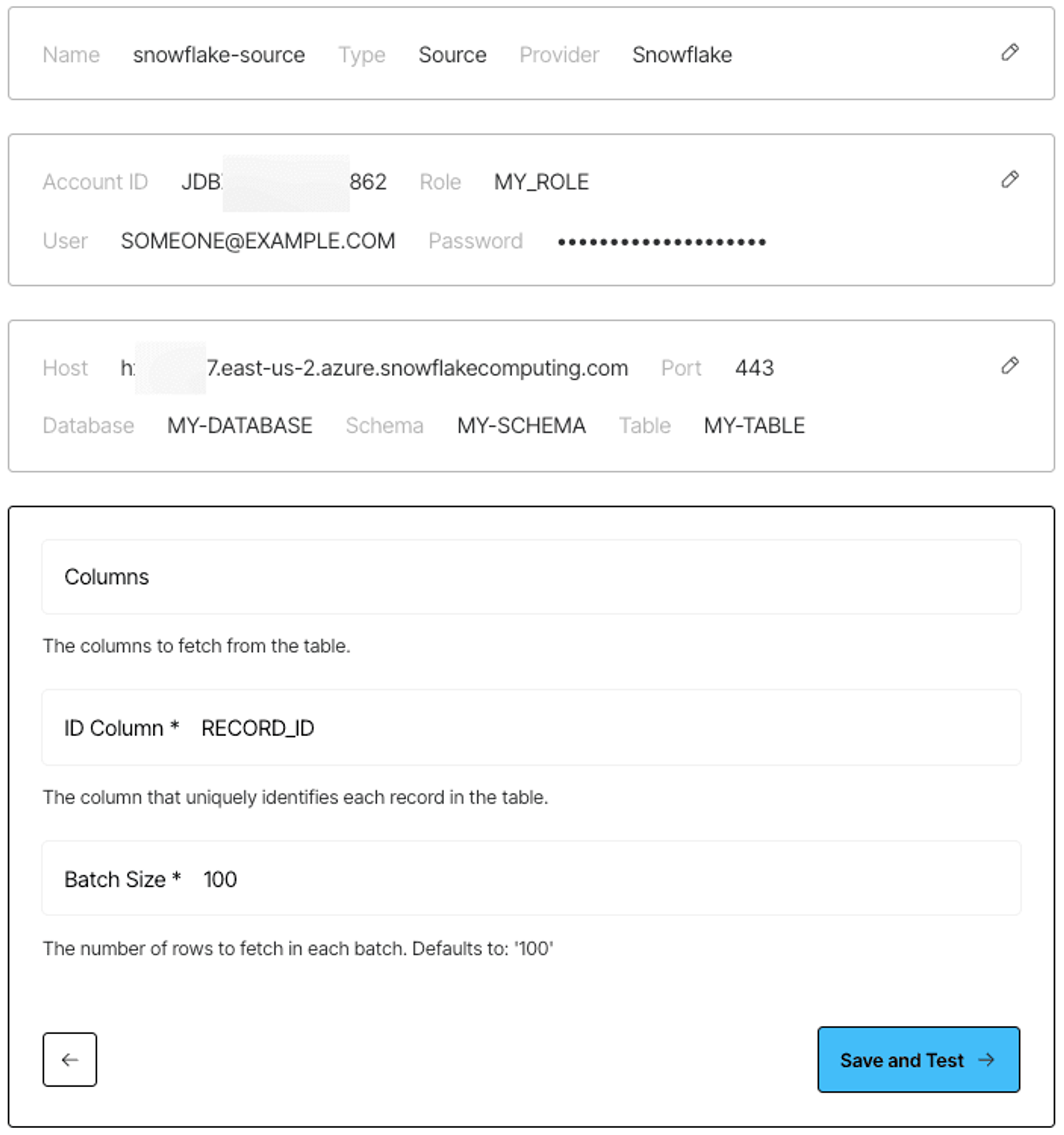

- Columns: A comma-separated list of columns to fetch from the table. By default, all columns are fetched unless otherwise specified.

- ID Column: The name of the column that uniquely identifies each record in the table.

- Batch Size: The maximum number of rows to fetch for each batch. The default is 50 if not otherwise specified.

Sample Snowflake source connector configuration:

Once you've filled everything out, save your configuration. Unstructured Platform will test the connection and let you know when it’s ready to start pulling data from Snowflake!

If you prefer to use Unstructured Platform as an API, here’s how you create a Snowflake source connector programmatically:

You can find the schema example and other helpful details in the documentation.
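As a rough illustration of how the source connector fields listed above fit together, the sketch below collects them into a single configuration object and wraps it in a request-style payload. Everything about the shape is an assumption made for illustration: the class name, the attribute names, the `to_payload` helper, and the `name`/`type`/`config` keys are hypothetical, so consult the documentation for the actual API schema.

```python
import os
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class SnowflakeSourceConfig:
    """Fields from the list above; attribute names are illustrative only."""
    account_id: str
    role: str
    user: str
    password: str
    host: str
    port: int
    database: str
    schema: str
    table: str
    id_column: str
    columns: Optional[List[str]] = None  # None means "fetch all columns"
    batch_size: int = 50                 # default stated in the field list


def to_payload(name: str, config: SnowflakeSourceConfig) -> dict:
    """Wrap the config in a hypothetical request body, dropping unset fields."""
    if config.batch_size < 1:
        raise ValueError("batch_size must be a positive integer")
    fields = {k: v for k, v in asdict(config).items() if v is not None}
    return {"name": name, "type": "snowflake", "config": fields}


# Usage with placeholder values; the password is read from the environment
# rather than hard-coded.
example = SnowflakeSourceConfig(
    account_id="ABC12345",
    role="UNSTRUCTURED_ROLE",
    user="ingest_user",
    password=os.environ.get("SNOWFLAKE_PASSWORD", ""),
    host="example.snowflakecomputing.com",
    port=443,
    database="DOCS_DB",
    schema="PUBLIC",
    table="DOCUMENTS",
    id_column="ID",
)
payload = to_payload("my-snowflake-source", example)
```

Keeping the optional fields as defaults on the dataclass mirrors the list above: omit `columns` to fetch everything, and omit `batch_size` to use 50.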

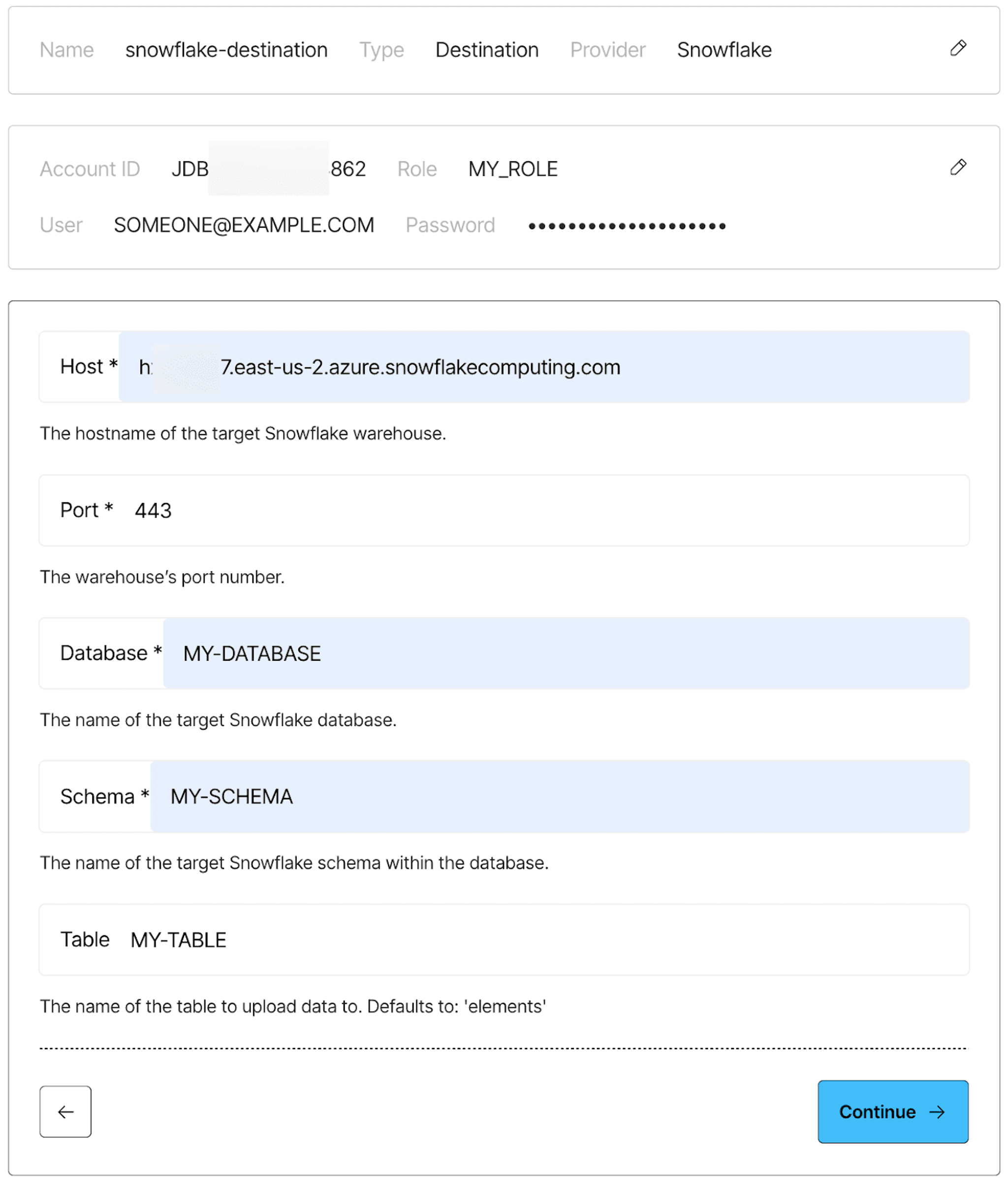

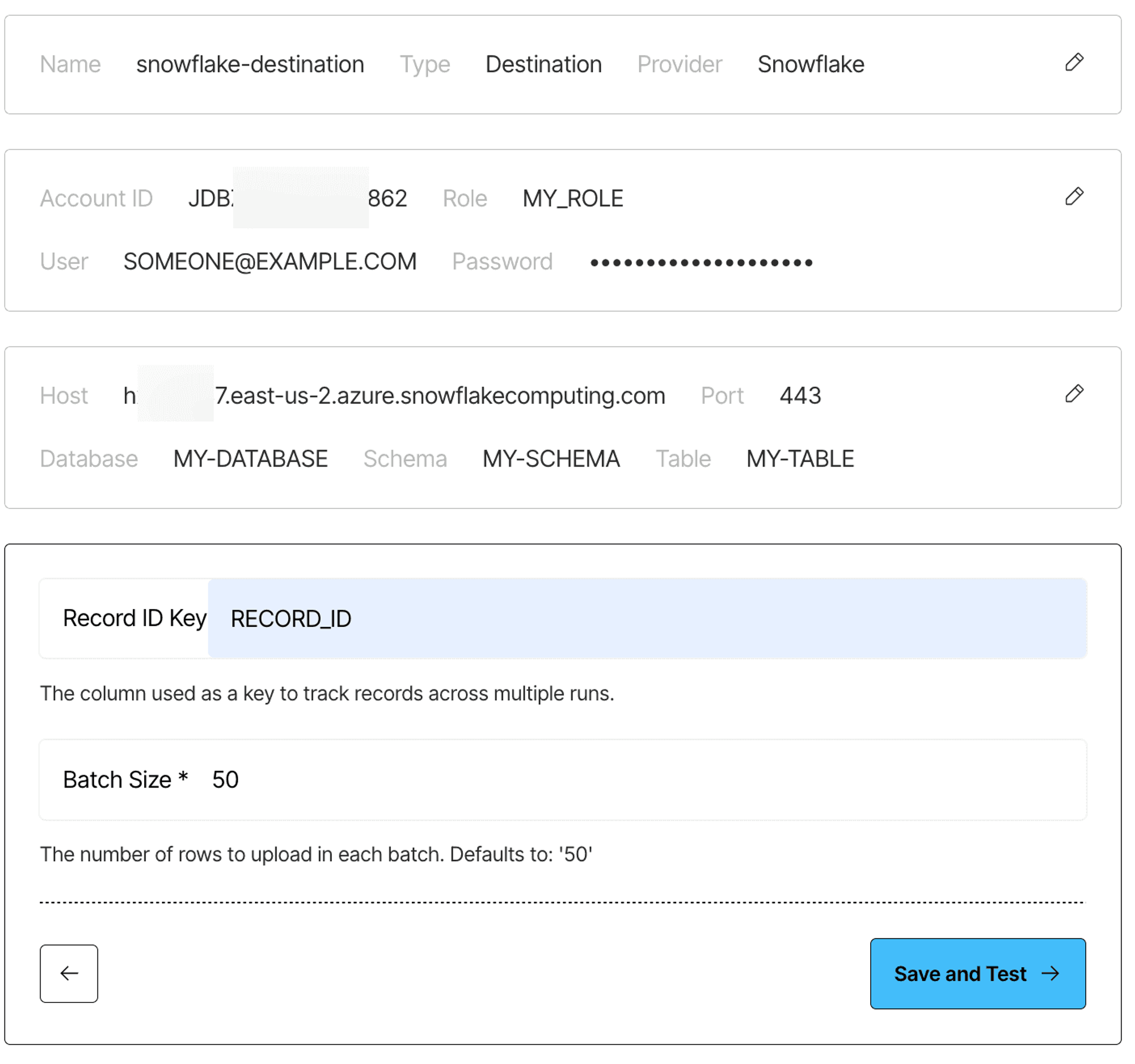

Destination Connector Configuration

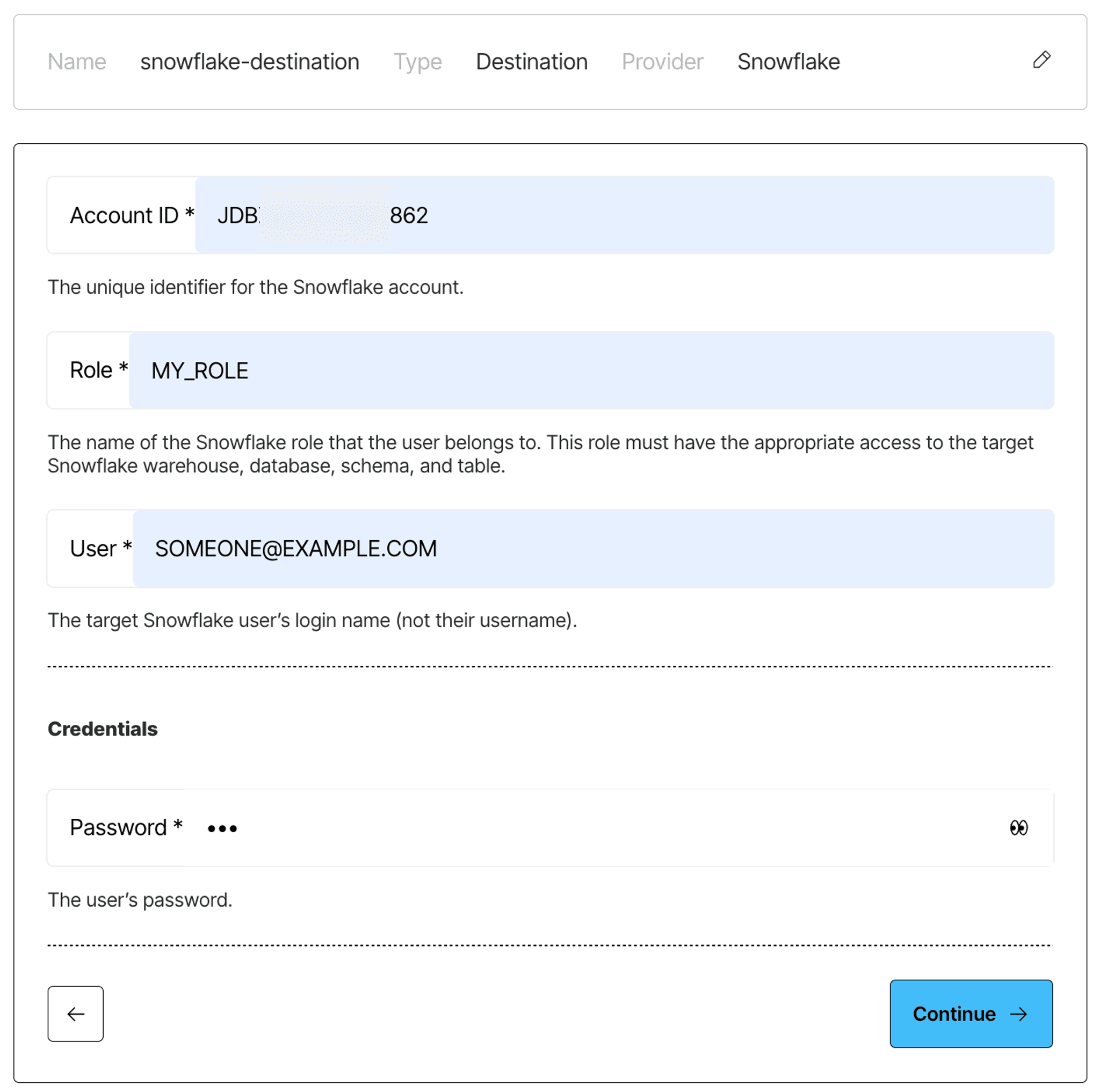

Configuring the Snowflake destination connector is just as straightforward.

1) Navigate to the Connectors section in the Platform UI, click Destinations, then New.

2) Select Snowflake as the provider and give your connector a name.

3) Configure the following required fields:

- Account ID: The target Snowflake account’s identifier

- Role: The name of the Snowflake role that the user belongs to. This role must have the appropriate access to the target Snowflake warehouse, database, schema, and table.

- User/Password: The target Snowflake user’s login name and their password.

- Host/Port: The hostname/port of the target Snowflake warehouse.

- Database: The name of the target Snowflake database.

- Schema: The name of the target Snowflake schema within the database.

- Record ID Key: The name of the column that uniquely identifies each record in the table. The default is record_id if not otherwise specified.

- Batch Size: The maximum number of rows to write in each batch. The default is 50 if not otherwise specified.
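To show how the Record ID Key and Batch Size settings interact, here is a small sketch of batched writes keyed by a record ID. It is a simplified model, not the connector's actual write logic: `write_in_batches` is a hypothetical helper, a Python dict stands in for the Snowflake table, and the replace-on-same-ID behavior is an assumption made for illustration.

```python
from typing import Dict, Iterable


def write_in_batches(rows: Iterable[dict], table: Dict[str, dict],
                     record_id_key: str = "record_id",
                     batch_size: int = 50) -> int:
    """Write rows into `table` in batches and return the number of batches.

    `table` is an in-memory stand-in for a Snowflake table, keyed by record ID.
    Assumption: a row whose ID already exists replaces the earlier row.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be a positive integer")
    pending = list(rows)
    batches = 0
    for start in range(0, len(pending), batch_size):
        for row in pending[start:start + batch_size]:
            table[row[record_id_key]] = row
        batches += 1
    return batches


# Usage with stand-in data: 120 processed elements, default batch size of 50.
table: Dict[str, dict] = {}
elements = [{"record_id": f"doc-{i}", "text": f"chunk {i}"} for i in range(120)]
first_run = write_in_batches(elements, table)

# Writing an updated row with an existing ID replaces it instead of duplicating.
second_run = write_in_batches([{"record_id": "doc-0", "text": "updated"}], table)
```

In this model, the first run takes three batches for 120 rows, and the second run leaves the row count unchanged because the ID already exists.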

Sample Snowflake destination connector configuration:

If you prefer to use Unstructured Platform as an API, here’s how you create a Snowflake destination connector programmatically:

Get started!

If you're already an Unstructured Platform user, the Snowflake integration is available in your dashboard today!

Expert access

Need a tailored setup for your specific use case? Our engineering team is available to help optimize your implementation. Book a consultation session to discuss your requirements here.