Most RAG pipelines start with the same assumption: you need vector embeddings to do anything useful.

It's easy to see why. Embedding models offer a clean path to semantic search, and when your queries are abstract or your documents are messy, vector similarity can cover a lot of ground. But there are tradeoffs: embedding adds latency, setup complexity, and often a layer of opacity when it comes to understanding why a specific chunk was retrieved.

That led us to try something simpler.

Instead of generating embeddings, we built a pipeline around BM25 — the ranking function behind keyword-based search in Elasticsearch. BM25 doesn’t “understand” meaning the way a dense vector model does, but it scores documents based on how well their terms match the query, with tunable weighting for frequency, rarity, and length.

In practice, BM25 works well when your content is structured and your queries are specific. It can retrieve exactly what you’re looking for, without any semantic model in the loop. And when it doesn’t work, it fails in ways that are easy to diagnose — usually because the terms don’t align.

This blog walks through what that looks like. We’ll process a batch of documents using Unstructured, index the output into Elasticsearch Serverless, and use BM25 retrieval to power a lightweight, embedding-free RAG system.

We’ll show where this setup works well, where it can fall short, and what those tradeoffs look like in practice.

You can find the full notebook here.

Prerequisites

This pipeline relies on four external systems. Each one requires a minimal set of credentials that you’ll need to set up ahead of time.

Unstructured

To start transforming your documents with Unstructured, you’ll need an API key. Contact us to get set up — we’ll get you provisioned with access and walk you through the next steps.

AWS S3

This is where your source documents will live. You’ll need:

- An access key ID

- A secret access key

- The full S3 path to your source location, including bucket and optional prefix

- Some files of any type 🙂. Check out our supported file types here.

These are used by Unstructured to connect to S3 and pull the raw documents into the workflow.

Elasticsearch

We’re using Elasticsearch to store the output chunks and handle keyword-based search with BM25. To connect to your Serverless deployment, you’ll need:

- The host URL of your deployment

- An API key

- The name of the index where you want to store content

You can create a Serverless project at https://cloud.elastic.co, and generate an API key from the “Security” section of your project settings.

OpenAI

This pipeline also uses an OpenAI model at query time to generate answers based on retrieved context. Any GPT-4-capable key will work. You’ll just need to set:

- Your OpenAI API key (OPENAI_API_KEY)

Connecting the pipeline

Unstructured uses the concept of connectors to move data between systems. Each connector defines either a source — where documents come from — or a destination — where processed results get stored. Once both ends are in place, we can wire them together into a workflow.

We start by initializing a source connector that points to an S3 bucket. This tells Unstructured where to look for incoming documents. The connector includes AWS access keys, the S3 path, and a flag that controls whether to pull files recursively through subdirectories. Once created, the connector is assigned a unique ID that we’ll reference in the workflow.

Next, we create a destination connector that points to Elasticsearch Serverless. This connector needs the host URL, index name, and API key. Once it’s live, Unstructured can push content directly into the specified Elasticsearch index after processing.
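As a rough sketch of what each connector carries, the two helpers below collect the settings described above into plain dictionaries. The helper names are hypothetical, and the field names (`remote_url`, `key`, `secret`, `recursive`, `hosts`, `index_name`, `es_api_key`) are assumptions about the connector payloads; check them against the Unstructured API reference before relying on them.

```python
def s3_source_config(remote_url: str, key: str, secret: str,
                     recursive: bool = True) -> dict:
    """Settings for the S3 source connector (field names are assumed)."""
    if not remote_url.startswith("s3://"):
        raise ValueError("remote_url should look like s3://bucket/optional/prefix/")
    return {
        "remote_url": remote_url,  # bucket plus optional prefix
        "key": key,                # AWS access key ID
        "secret": secret,          # AWS secret access key
        "recursive": recursive,    # walk subdirectories under the prefix
    }


def elasticsearch_destination_config(host: str, index_name: str,
                                     api_key: str) -> dict:
    """Settings for the Elasticsearch destination connector (field names are assumed)."""
    return {
        "hosts": [host],           # Serverless project URL
        "index_name": index_name,  # index that will receive the chunks
        "es_api_key": api_key,
    }
```

Keeping the two configurations as separate, small dictionaries mirrors the source and destination split: either side can be swapped without touching the other.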

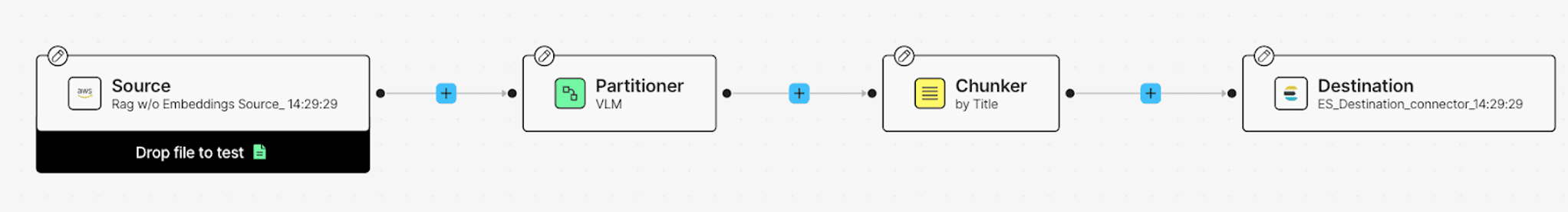

With both connectors in place, we define the workflow. This one is intentionally minimal: we include a partitioner node to extract structured elements from the documents, and a chunker node to break them into smaller, retrievable units. We skip embedding entirely: no vector generation, no similarity model, just clean content ready for indexing.

Each node can be configured. The partitioner uses Claude 3.7 Sonnet to detect semantic elements, and the chunker is tuned with a max character limit and an overlap value to maintain context across boundaries. We give the workflow a name, wire in the source and destination IDs, and launch it.
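To make the two chunker settings concrete, here is a minimal sketch of character-based chunking with overlap. It is not Unstructured's chunker, which operates on partitioned elements rather than raw strings; the function name and default values are illustrative assumptions.

```python
def chunk_text(text: str, max_characters: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into windows of at most max_characters, where each window
    repeats the last `overlap` characters of the previous one."""
    if max_characters <= 0:
        raise ValueError("max_characters must be positive")
    if not 0 <= overlap < max_characters:
        raise ValueError("overlap must be in [0, max_characters)")

    chunks = []
    step = max_characters - overlap  # how far the window advances each time
    start = 0
    while start < len(text):
        chunks.append(text[start:start + max_characters])
        if start + max_characters >= len(text):
            break  # the current window already reaches the end of the text
        start += step
    return chunks
```

A larger overlap keeps more shared context at chunk boundaries, at the cost of indexing more duplicated text.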

Once the workflow is running, it handles everything else. Files are pulled from S3, processed through the pipeline, and indexed into Elasticsearch.

Processing the batch

Once the workflow is triggered, Unstructured spins up a background job to process the documents. You can monitor progress using the job ID returned from the initial launch. In our notebook, we use a simple polling loop that checks the job status every 30 seconds until it completes.
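A polling loop of this kind might look like the sketch below. The `get_status` callable is a hypothetical stand-in for whatever call fetches the job's status by ID; only the `COMPLETED` state comes from this walkthrough, and `FAILED` is an assumed terminal state.

```python
import time
from typing import Callable

# COMPLETED is the state named in this post; FAILED is an assumption.
TERMINAL_STATES = {"COMPLETED", "FAILED"}


def wait_for_job(get_status: Callable[[], str],
                 interval_seconds: float = 30.0,
                 max_checks: int = 120,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Call get_status() until it returns a terminal state, then return it."""
    for _ in range(max_checks):
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        sleep(interval_seconds)  # wait before checking again
    raise TimeoutError(f"job did not finish after {max_checks} checks")
```

Capping the number of checks keeps a stuck job from blocking the notebook indefinitely.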

While the job is running, Unstructured is pulling files from S3, extracting structured elements, chunking them, and writing the output directly into your Elasticsearch index. Each chunk is indexed as a standalone document, with metadata that includes its source file, position, and any detected structure (like section titles or headers).

Once the job status flips to COMPLETED, you’re done. The index is now populated with clean, retrievable chunks of content.

This is the point where most of the heavy lifting ends. The documents have been processed, indexed, and made searchable. Everything downstream from here is retrieval and generation.

Retrieving with BM25

With the documents indexed, we can now query Elasticsearch using BM25. BM25 is a keyword-based scoring algorithm that ranks documents based on how well their content matches a given query. It takes into account term frequency, term rarity across the index (inverse document frequency), and length normalization to return the most relevant chunks.

We define a retrieval strategy in LangChain using the BM25Strategy class and pass in the Elasticsearch client along with the index name. This gives us a search interface that runs fast, returns full chunks, and doesn’t require any embedding vectors.

BM25 can be tuned, though in our case we used the default settings:

- k1 = 1.2: controls how much term frequency affects the score

- b = 0.75: controls how much document length matters

Higher k1 values give more weight to repeated terms. Lower b values reduce the impact of document length. For most structured or operational content, the defaults work well.
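To see how k1 and b enter the score, here is a small, self-contained sketch of the textbook BM25 formula over pre-tokenized documents. It is an illustration only: Elasticsearch computes scores inside Lucene, whose implementation differs in some constants, and the function name and toy corpus handling are assumptions.

```python
import math
from collections import Counter


def bm25_score(query_terms: list[str], doc_terms: list[str],
               corpus: list[list[str]], k1: float = 1.2, b: float = 0.75) -> float:
    """Textbook BM25 score of one tokenized document against a query."""
    n_docs = len(corpus)
    avg_len = sum(len(doc) for doc in corpus) / n_docs
    term_counts = Counter(doc_terms)
    # Length normalization: b=0 ignores length, b=1 normalizes fully.
    length_norm = 1 - b + b * len(doc_terms) / avg_len

    score = 0.0
    for term in set(query_terms):
        tf = term_counts[term]
        df = sum(1 for doc in corpus if term in doc)  # docs containing the term
        if tf == 0 or df == 0:
            continue
        # Rare terms get a higher inverse document frequency.
        idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
        # k1 controls how quickly repeated occurrences saturate.
        score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
    return score
```

Scoring the same document with different `k1` and `b` values is a quick way to build intuition before changing anything on a real index.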

When a user submits a query, we run similarity_search() through LangChain. It hits Elasticsearch, retrieves the top k matching chunks using BM25, and assembles them into a single block of context.
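Under the hood, this kind of retrieval amounts to a standard Elasticsearch `match` query, which is scored with BM25 by default. The sketch below builds such a request body and joins the returned hits into one context string. The helper names are hypothetical, and the `text` field name is an assumption about how the chunks were indexed.

```python
def bm25_search_body(query: str, k: int = 4, text_field: str = "text") -> dict:
    """Request body for a top-k full-text search; match queries use BM25 by default."""
    return {
        "size": k,  # number of chunks to return
        "query": {"match": {text_field: {"query": query}}},
    }


def assemble_context(hits: list[dict], text_field: str = "text") -> str:
    """Join the text of each hit, in ranked order, into a single context block."""
    return "\n\n".join(hit["_source"][text_field] for hit in hits)
```

Because the hits arrive already ranked, the context preserves BM25's ordering, which makes it easy to see which chunk the model was most likely to lean on.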

That context is passed into an OpenAI model via a prompt template. The model then generates a response using only the retrieved content as input.
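A prompt template for this step can be very small. The wording below is a hypothetical example, not the template from the notebook; the point is only that the retrieved context and the user's question are the two inputs.

```python
# Hypothetical template wording; adjust to your own use case.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say so.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(context: str, question: str) -> str:
    """Fill the template with retrieved context and the user's question."""
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

Asking the model to say when the context lacks an answer gives it an alternative to guessing when retrieval comes back thin.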

This setup is fast and easy to debug. You can inspect exactly which chunks were returned and see how well they match the query. And since there are no vectors involved, you don’t run into opaque similarity scores or unpredictable semantic drift.

This works surprisingly well — until it doesn’t.

What BM25 gets right, and where it slips

We tested the setup with a set of operational cybersecurity documents, including a federal playbook on incident response. Here’s how two example queries performed.

Query: “What are the containment procedures?”

This query is sharp and literal. BM25 returned several chunks that directly reference “containment,” “mitigation,” and “response strategies.” The top-ranked chunk listed step-by-step instructions for system isolation, network-level blocking, and credential resets.

The LLM generated a clean summary of those steps. No hallucinations, no missed context. This is the kind of query BM25 excels at — the language is well-aligned with how the information appears in the documents.

Query: “Where in the document does it describe coordination between the SOC and executive leadership?”

This one didn’t go as well. None of the top-ranked chunks mentioned both “SOC” and “executive leadership” explicitly. A few had related phrases — like “agency IT leadership” and “declare incident” — but the connection wasn’t clear.

The model still returned an answer. It sounded fluent, even plausible, citing sections about communication procedures and incident declaration. But the answer wasn’t grounded in an exact retrieved passage. It was inferred, stitched together from loosely related fragments.

This is where BM25 struggles. Without semantic awareness, it can’t bridge phrasing gaps. It only sees the words you give it, and retrieves what matches.
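One way to diagnose a miss like this is to check which query terms literally appear in a retrieved chunk. The sketch below uses a simple lowercase, alphanumeric tokenizer as a rough stand-in for the index analyzer; the function name and the example chunk text are illustrative assumptions.

```python
import re


def query_term_coverage(query: str, chunk: str) -> dict[str, bool]:
    """Map each distinct query term to whether it appears in the chunk."""
    def tokenize(text: str) -> list[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    chunk_terms = set(tokenize(chunk))
    # dict.fromkeys keeps first-seen order while dropping duplicate terms.
    return {term: term in chunk_terms for term in dict.fromkeys(tokenize(query))}


coverage = query_term_coverage(
    "coordination between the SOC and executive leadership",
    "Notify agency IT leadership and declare incident",
)
missing = [term for term, found in coverage.items() if not found]
```

Here `missing` includes "soc", "executive", and "coordination", which is the vocabulary gap described above: the chunk shares "leadership" with the query but none of the terms that make the question specific.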

When keyword search is the right tool

BM25 doesn’t try to understand what you mean; it just matches what you say. That’s both its strength and its ceiling.

It’s a great fit when your documents are well-structured and your queries are sharp. Think policy manuals, incident response playbooks, and vendor security docs: places where the language is controlled, the format is consistent, and users are likely to ask questions using the same words that appear in the source.

This setup also works well when speed and simplicity matter. There’s no embedding step, no vector store to manage, and no risk of fuzzy semantic drift. Retrieval is fast, repeatable, and easy to debug.

But BM25 doesn’t handle abstraction. If the user asks a question with different phrasing than what’s in the document, it might miss entirely. And when that happens, the model downstream is forced to guess or, worse, hallucinate.

This isn’t a flaw in BM25. It’s a signal that you’ve outgrown what keyword search can offer.

The good news is you don’t need to start over. You can keep the same pipeline, the same documents, and add a hybrid strategy, one that blends exact keyword matches with semantic retrieval from embeddings.
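One common way to blend the two result lists is reciprocal rank fusion (RRF), sketched below over lists of chunk IDs. This is an illustration of the general idea rather than the specific hybrid setup of any one tool; the function name is hypothetical, and the rank constant of 60 is a commonly used default, assumed here.

```python
def reciprocal_rank_fusion(rankings: list[list[str]],
                           rank_constant: int = 60) -> list[str]:
    """Merge ranked lists of IDs: each list contributes 1 / (rank_constant + rank)."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (rank_constant + rank)
    # Highest fused score first; ties are broken by ID for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["a", "b", "c"]   # e.g. from BM25
semantic_hits = ["c", "a", "d"]  # e.g. from a vector search
fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
```

RRF only needs ranks, not raw scores, so BM25 scores and vector similarities never have to be put on the same scale.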

But even without that, this baseline setup can take you surprisingly far. For internal tools, knowledge bases, or early-stage RAG prototypes, BM25 is often more than enough.

Where this takes you next

We built a RAG pipeline with just structured parsing, keyword-based retrieval, and a language model to answer questions.

It worked well when the queries were sharp and the documents used consistent language. It faltered when the phrasing didn’t match, not because the model couldn’t reason, but because BM25 didn’t return the right input in the first place.

That limitation is easy to fix. You can keep everything we’ve shown here (the workflows, the connectors, the document structure) and layer on an embedding step. Unstructured supports it out of the box. Elasticsearch supports hybrid search. LangChain can combine the two. Nothing breaks.

But what we hope this walkthrough shows is that you don’t always need vectors to get started. Sometimes, classic retrieval is the right baseline, especially when your data is clean, your queries are literal, and your priorities are speed and simplicity.

If you're exploring RAG pipelines in production or want to simplify how you process and index unstructured content, we can help. Get in touch to get onboarded to Unstructured. For enterprises with more complex needs, we offer tailored solutions and white-glove support. Contact us to see how Unstructured can fit your architecture.