
What is ONNX?

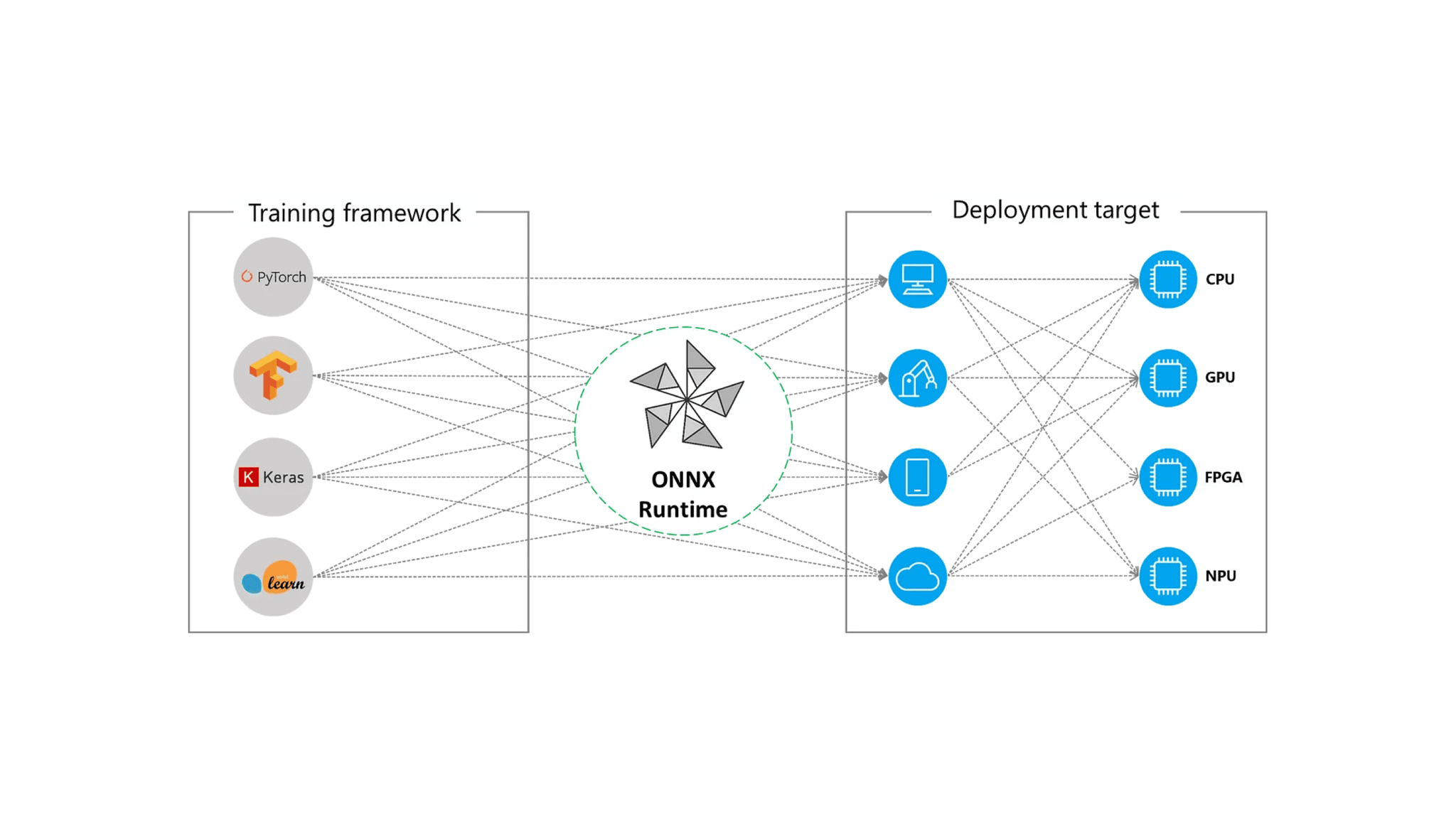

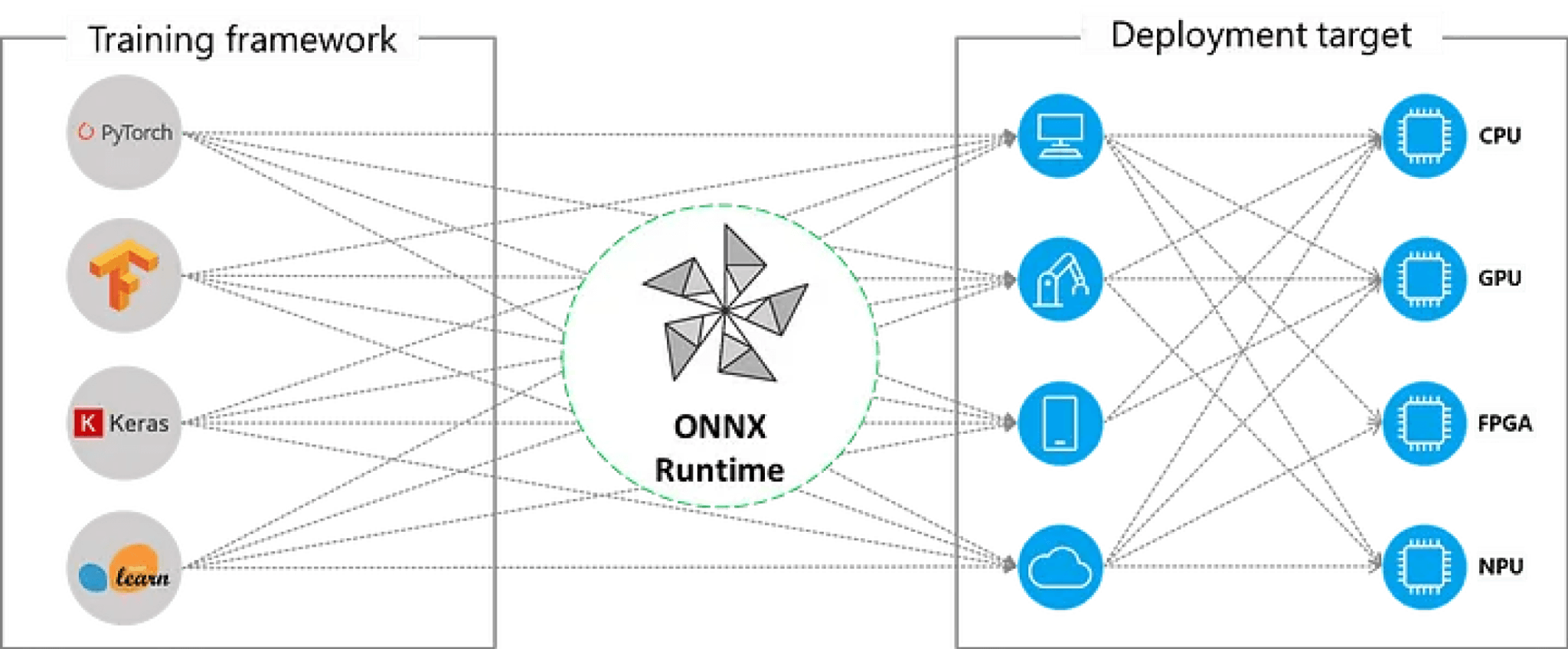

ONNX (Open Neural Network Exchange) is an open standard that enables interoperability between machine learning tools by defining a common set of operators. In essence, a model defined in your favorite tool (PyTorch, TensorFlow, Keras, scikit-learn) is rewritten in terms of these standardized operators, so you can load it in any other compatible tool to run it or even train it.

source: https://onnxruntime.ai/docs/execution-providers/

This way, you can define a model in any framework, train it with the tools you already know, and then share it with others for further development or deploy it directly to an inference server. Having the model in a standardized, publicly available format brings an additional advantage: the runtime can apply optimizations specific to the target architecture, making better use of the target machine's computing capabilities with very few changes from the developers.

Exporting Detectron2

Once we had found the right tool, we got to work and discovered that the Detectron2 repository already included a script to export models. All we needed was the YAML file with the model definition (which layoutparser kindly made available) and to run the script. The result is a file that we can load in the ONNX Runtime environment and easily run on CPU, GPU, or even dedicated hardware.

The Detectron2 model exported to ONNX is available in the Unstructured library starting with version 0.7.0. If you had problems with the installation before, upgrading Unstructured will likely solve your issues.

So far, we have only exported the same model we had before: same parameters, same results, but with fewer dependencies in the library. Is it possible to do more? Yes, but that's a topic for another blog post. Stay tuned ;)