Authors

Why is Chunking Necessary?

Chunking is an essential preprocessing step when preparing data for RAG for a number of reasons.

Context Window Limit

Let’s start with the basics. The retrieved chunks will be fed directly into the prompt as context for your LLM to generate a response. This means that at the very minimum, the total length of all of the retrieved chunks combined cannot exceed the context window of the LLM. Though many LLMs today have generous context windows, you still may not want to fill up the context window to the brim, as these LLMs will struggle with the “needle in the haystack” problem. You may also want to utilize that large context window in additional ways, such as providing thorough instructions, a persona description, or some few-shot examples.

Additionally, if you intend to employ similarity search and embed your documents, you have to consider that embedding models also have a limited context window. These models cannot embed text that surpasses the maximum length of their context window. This limit varies depending on the specific model, but you can easily find this information in the model’s description, e.g. in the model card on the Hugging Face Hub. Once you know which model you will be using to generate the embeddings, you have the hard maximum value for the chunk length (expressed in tokens, not characters or words). Embedding models typically max out at around 8K tokens or less in context window size, which equates to roughly 6200 words in the English language. To put this in perspective, the entire Lord of the Rings series, including “The Hobbit”, clocks in at approximately 576,459 words, so if you wanted to utilize this corpus for RAG with similarity search, you'd need to divide it into at least 93 chunks.

The Effect of Chunk Size on Retrieval Precision

While the embedding model imposes a hard maximum limit on the number of tokens it can embed, that doesn't mean your chunks need to reach that length. It simply means they can't exceed it. In fact, utilizing the maximum length for each chunk, such as 6200 words (8K tokens), may be excessive in many scenarios. There are several compelling reasons to opt for smaller chunks.

Let’s take a step back for a minute, and recall what happens when we embed a piece of text to get the embedding vector. Most embedding models are encoder-type transformer models that take as input text up to the maximum length, and give you back a single vector representation of fixed dimension, for example 768. Regardless of whether you give a model a sentence of 10 words or a paragraph of 1000 words, both resulting embedding vectors will have the same dimension of 768. The way this works is that the model first converts the text into tokens, for each of these tokens it has learned a vector representation during pre-training. It then applies a pooling step to average individual token representations into a single vector representation.

Common types of pooling are:

- CLS pooling: vector representation of the special [CLS] token becomes the representation for the whole sequence

- Mean pooling: the average of token vector representations is returned as the representation for the whole sequence

- Max pooling: the token vector representation with the largest values becomes the representation for the whole sequence

The goal is to compress the granular token-level representations into a single fixed-length representation that encapsulates the meaning of the entire input sequence. This compression is inherently lossy. With larger chunks, the representation may become overly coarse, potentially obscuring important details. To ensure precise retrieval, it's crucial for chunks to possess meaningful and nuanced representations of the text.

Now, consider another potential issue. A large chunk may encompass multiple topics, some of which may be relevant to a user query, while others are not. In such a case, the representation of each topic within a single vector can become diluted, which again will affect the retrieval precision.

On the other hand, smaller chunks that maintain a focused context, allow for more precise matching and retrieval of relevant information. By breaking down documents into meaningful segments, the retriever can more accurately locate specific passages or facts, which ultimately improves RAG performance. So, how small can chunks be while still retaining their contextual integrity? This will depend on the nature of your documents, and may require some experimentation. Typically, a chunk size of about 250 tokens, equivalent to approximately 1000 characters, is a sensible starting point for experimentation.

Now that we've covered the reasons for chunking, let's look at some practical aspects of it.

Common Approaches to Chunking

Character splitting

The very basic way to split a large document into smaller chunks is to divide the text into N-character sized chunks. Often in this case, you would also specify a certain number of characters that should overlap between consecutive chunks. This somewhat reduces the likelihood of sentences or ideas being abruptly cut off at the boundary between two adjacent chunks. However, as you can imagine, even with overlap, a fixed character count per chunk, coupled with a fixed overlap window, will inevitably lead to disruptions in the flow of information, mixing of disparate topics, and even sentences being split in the middle of a word. The character splitting approach has absolutely no regard for document structure.

Sentence-level chunking or recursive chunking

Character splitting is a simplistic approach that doesn’t take into account the structure of a document at all. By relying solely on a fixed character count, this method often results in sentences being split mid-way or even mid-word, which is not great.

One way to address this problem is to use a recursive chunking method that helps to preserve individual sentences. With this method you can specify an ordered list of separators to guide the splitting process. For example, here are some commonly used separators:

- "\n\n" - Double new line, commonly indicating paragraph breaks

- "\n" - Single new line

- "." - Period

- " " - Space

If we apply the separators listed above in their specified order, the process will go like this. First, recursive chunking will break down the document at every occurrence of a double new line ("\n\n"). Then, if these resulting segments still exceed the desired chunk size, it will further break them down at new lines ("\n" ), and so on.

While this method significantly reduces the likelihood of sentences being cut off mid-word, it still falls short of capturing the complex document structure. Documents often contain a variety of elements, such as paragraphs, section headers, footers, lists, tables, and more, all of which contribute to their overall organization. However, the recursive chunking approach outlined above primarily considers paragraphs and sentences, neglecting other structural nuances.

Moreover, documents are stored in many native formats, so you would have to come up with different sets of separators for each different document type. The list above may work fine for plain text, but you’ll need a more nuanced and tailored list of separators for markdown, another list if you have HTML or XML document, and so on. Extending this approach to handle image-based documents like PDFs and PowerPoint presentations introduces further complexities. If your use case involves a diverse set of unstructured documents, uniformly applying recursive chunking quickly becomes a non-trivial task.

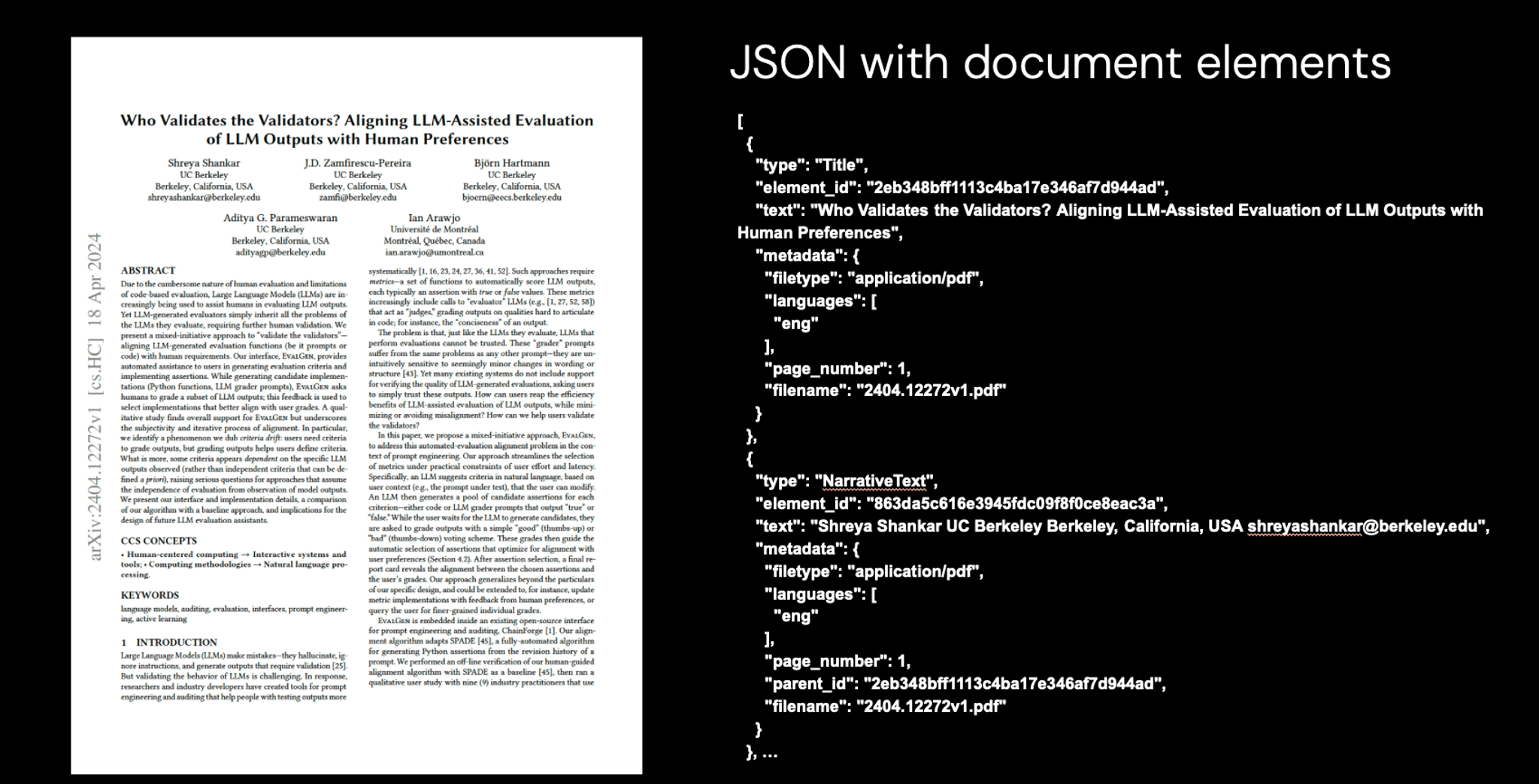

Smart chunking with Unstructured

Unstructured offers several smart chunking strategies, all of which have a significant advantage over the previously mentioned approaches. Instead of dealing with a wall of plain text with a random set of potential separators, once you partition the documents of any kind with Unstructured, chunking is applied to a set of individual document elements that represent logical units of the original document and reflect its structure.

This means that you don’t have to figure out how to tell apart individual sections of a document. Unstructured has already done the heavy lifting, presenting you with distinct document elements that encapsulate paragraphs, tables, images, code snippets, and any other meaningful text units within the document. Coming out of the partitioning step, the document is already divided into smaller segments. Does this mean that the document is already chunked? Not quite, but you're halfway there!

Some of the document elements derived from partitioning may still exceed the context window of your embedding model or your desired chunk size. These will require further splitting. Conversely, some document elements may be too small to contain enough context. For instance, a list is partitioned into individual `ListItem` elements, but you may opt to combine these elements into a single chunk, provided they still fit within your preferred chunk size.

Starting with a document that has been systematically partitioned into discrete elements, the smart chunking strategies Unstructured offers, allow you to:

- Ensure that information flow remains uninterrupted, preventing mid-word splits that simple character chunking suffers from.

- Control the maximum and minimum sizes of the chunks.

- Guarantee that distinct topics or ideas such as separate sections with different subjects, are not, are not merged.

Smart chunking is a step beyond recursive chunking that actually takes into account the semantic structure and content of the documents.

Smart chunking offers four strategies which differ in how they guarantee the purity of content within chunks:

- “Basic” chunking strategy: This method allows you to combine sequential elements to maximally fill each chunk while respecting the maximum chunk size limit. If a single isolated element exceeds the hard-max, it will be divided into two or more chunks.

- “By title” chunking strategy: This strategy leverages the document element types identified during partitioning to understand the document structure, and preserves section boundaries. This means that a single chunk will never contain text that occurred in two different sections, ensuring that topics remain self-contained for enhanced retrieval precision.

- “By page” chunking strategy (available only in Serverless API): Tailored for documents where each page conveys unique information, this strategy ensures that content from different pages is never intermixed within the same chunk. When a new page is detected, the existing chunk is completed and a new one is started, even if the next element would fit in the prior chunk.

- “By similarity” chunking strategy (available only in Serverless API): When the document structure fails to provide clear topic boundaries, you can use the “by similarity” strategy. This strategy employs the `sentence-transformers/multi-qa-mpnet-base-dot-v1` embedding model to identify topically similar sequential elements and combine them into chunks.

An added advantage of Unstructured’s smart chunking strategies is their universal applicability across diverse document types. You won’t need to hardcode and maintain the separator lists for each document as you would in case of recursive chunking. This allows for easy experimentation with chunk size and chunking strategy, enabling you to pinpoint the optimal approach for any given use case.

Conclusion

Chunking is one of the essential preprocessing steps in any RAG system. The choices you make when you set it up, will influence the retrieval quality, and as a consequence, the overall performance of the system. Here are some considerations to keep in mind when designing the chunking step:

- Experiment with different chunk sizes: While large chunks may contain more context, they also result in coarse representation, negatively affecting the retrieval precision. Optimal size chunk depends on the nature of your documents, but aim to optimize for smaller chunks without losing important context.

- Utilize smart chunking strategies: Opt for chunking strategies that allow you to separate text on semantically meaningful boundaries to avoid interrupting the information flow, or mixing content.

- Evaluate the impact of your chunking choices on the overall RAG performance: Set up an evaluation set for your specific use case, and track how your experiments with chunk sizes and chunking strategies impact the overall performance. Unstructured streamlines chunking experimentation by allowing you to simply tweak a parameter or two, no matter the documents’ type.

Get your Serverless API key today and start your experiments! We cannot wait to see what you build with Unstructured Serverless. If you have any questions, we’d love to hear from you! Please feel free to reach out to our team at hello@unstructured.io or join our Slack community.