
Building effective RAG systems requires processing vast amounts of unstructured data and making it available for retrieval in a consistent, organized way. While Databricks Delta Tables offer robust capabilities for data storage and management, getting unstructured content into them in a format suitable for RAG has been a significant challenge. Data teams often struggle with preprocessing documents, managing schema evolution, and ensuring data consistency when loading processed content into Delta Tables.

Today, we're excited to highlight Unstructured Platform's native integration with Databricks Delta Tables. This integration enables seamless extraction and transformation of unstructured data directly into Delta Tables, complete with proper schema handling and metadata management.

What are Delta Tables?

Delta Tables are tables in Databricks stored in the Delta Lake format, which combines the reliability of traditional databases with the scalability of data lakes. They provide ACID transactions, table versioning, and schema enforcement, making them well suited to managing large-scale structured and semi-structured data. When used with Unity Catalog, Delta Tables offer enhanced governance, security, and auditability features crucial for enterprise RAG deployments.

How the Integration Works

The integration allows you to directly stream processed documents from Unstructured Platform into Delta Tables with a predefined schema optimized for RAG applications. Key features include:

- Direct writing of processed document chunks with embeddings and metadata to Delta Tables

- Automatic schema compliance with RAG-optimized table structure

- Support for both SQL warehouse and all-purpose cluster access

- Integration with Unity Catalog for enhanced security and governance

- Support for both personal access token and Databricks managed service principal authentication

- Configuration through either the Platform UI or the Platform API
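To make the first feature concrete, here is a minimal sketch of how rows holding chunk text, an embedding, and metadata might be ranked for retrieval once they are in a table. The column names (`text`, `embeddings`, `filename`) and the tiny three-dimensional vectors are illustrative assumptions, not the connector's actual schema; check the documentation for the real table definition.

```python
import math

# Hypothetical rows as they might be read back from a Delta Table.
# Column names and vector values are illustrative assumptions only.
rows = [
    {"text": "Delta Tables provide ACID transactions.",
     "embeddings": [0.9, 0.1, 0.0], "filename": "delta.pdf"},
    {"text": "Unity Catalog adds governance.",
     "embeddings": [0.1, 0.9, 0.0], "filename": "unity.pdf"},
    {"text": "Service principals use OAuth.",
     "embeddings": [0.0, 0.2, 0.9], "filename": "auth.pdf"},
]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_embedding, rows, k=2):
    """Return the k rows whose embeddings are most similar to the query."""
    ranked = sorted(
        rows,
        key=lambda row: cosine_similarity(query_embedding, row["embeddings"]),
        reverse=True,
    )
    return ranked[:k]


# In a real pipeline the query vector would come from the same
# embedding model that produced the stored chunk embeddings.
query = [0.8, 0.2, 0.0]
results = top_k(query, rows, k=2)
for row in results:
    print(row["filename"], "|", row["text"])
```

At production scale this brute-force ranking would normally be replaced by a vector index; the point here is only that each row carries everything a retriever needs.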

Setting Up the Integration

To help you set up your Databricks environment and obtain the necessary credentials, we recorded a few quick videos illustrating all the steps. You can find them on the documentation page. Prerequisites include:

- A Databricks account (AWS, Azure, or GCP)

- A workspace with either a SQL warehouse or an all-purpose cluster

- Unity Catalog enabled, with the appropriate catalog, schema, volume, and table set up

- Authentication credentials (PAT or OAuth)
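The prerequisites above can be sanity-checked before you touch the connector. The sketch below is one possible way to do that: the `ConnectorSettings` class and its field names are hypothetical, and the HTTP path prefixes reflect how Databricks paths typically look for SQL warehouses and clusters, so treat the check as a heuristic rather than a rule.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ConnectorSettings:
    """Hypothetical container for the values gathered from the prerequisites."""
    server_hostname: str
    http_path: str
    catalog: str
    schema: str
    volume: str
    table: str
    token: Optional[str] = None          # personal access token (PAT)
    client_id: Optional[str] = None      # OAuth, service principal
    client_secret: Optional[str] = None  # OAuth, service principal


def compute_kind(http_path: str) -> str:
    """Guess the compute type from the shape of the HTTP path (heuristic)."""
    path = http_path.lstrip("/")
    if path.startswith("sql/1.0/warehouses/"):
        return "sql_warehouse"
    if path.startswith("sql/protocolv1/"):
        return "all_purpose_cluster"
    return "unknown"


def missing_prerequisites(settings: ConnectorSettings) -> List[str]:
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    for name in ("server_hostname", "http_path", "catalog",
                 "schema", "volume", "table"):
        if not getattr(settings, name):
            problems.append(f"missing {name}")

    has_pat = bool(settings.token)
    has_oauth = bool(settings.client_id and settings.client_secret)
    if not (has_pat or has_oauth):
        problems.append(
            "missing credentials: set token, or client_id and client_secret"
        )

    if settings.http_path and compute_kind(settings.http_path) == "unknown":
        problems.append(
            "http_path does not look like a SQL warehouse or cluster path"
        )
    return problems


# Placeholder values; substitute the ones from your own workspace.
settings = ConnectorSettings(
    server_hostname="dbc-example.cloud.databricks.com",
    http_path="/sql/1.0/warehouses/abc123",
    catalog="main",
    schema="default",
    volume="rag_volume",
    table="elements",
    client_id="my-client-id",
    client_secret="my-client-secret",
)
print(missing_prerequisites(settings))
```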

Configuration Steps in Platform UI

Once you meet all of the prerequisites, setting up the Delta Tables in Databricks destination connector takes three steps in the Unstructured Platform UI:

1) Navigate to the Connectors section, click Destinations, then New.

2) Select Delta Tables in Databricks as the Provider.

3) Fill in the required fields.

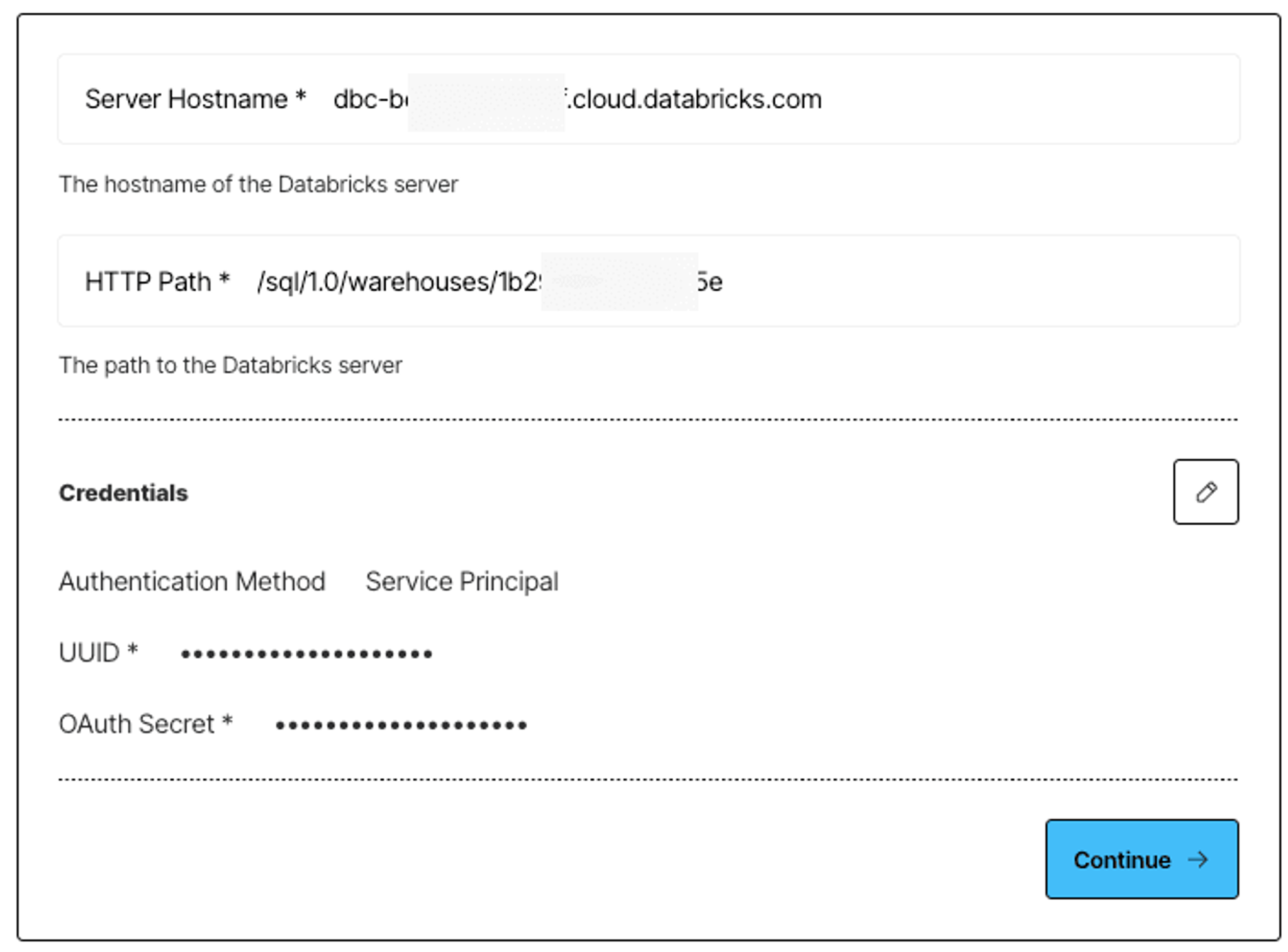

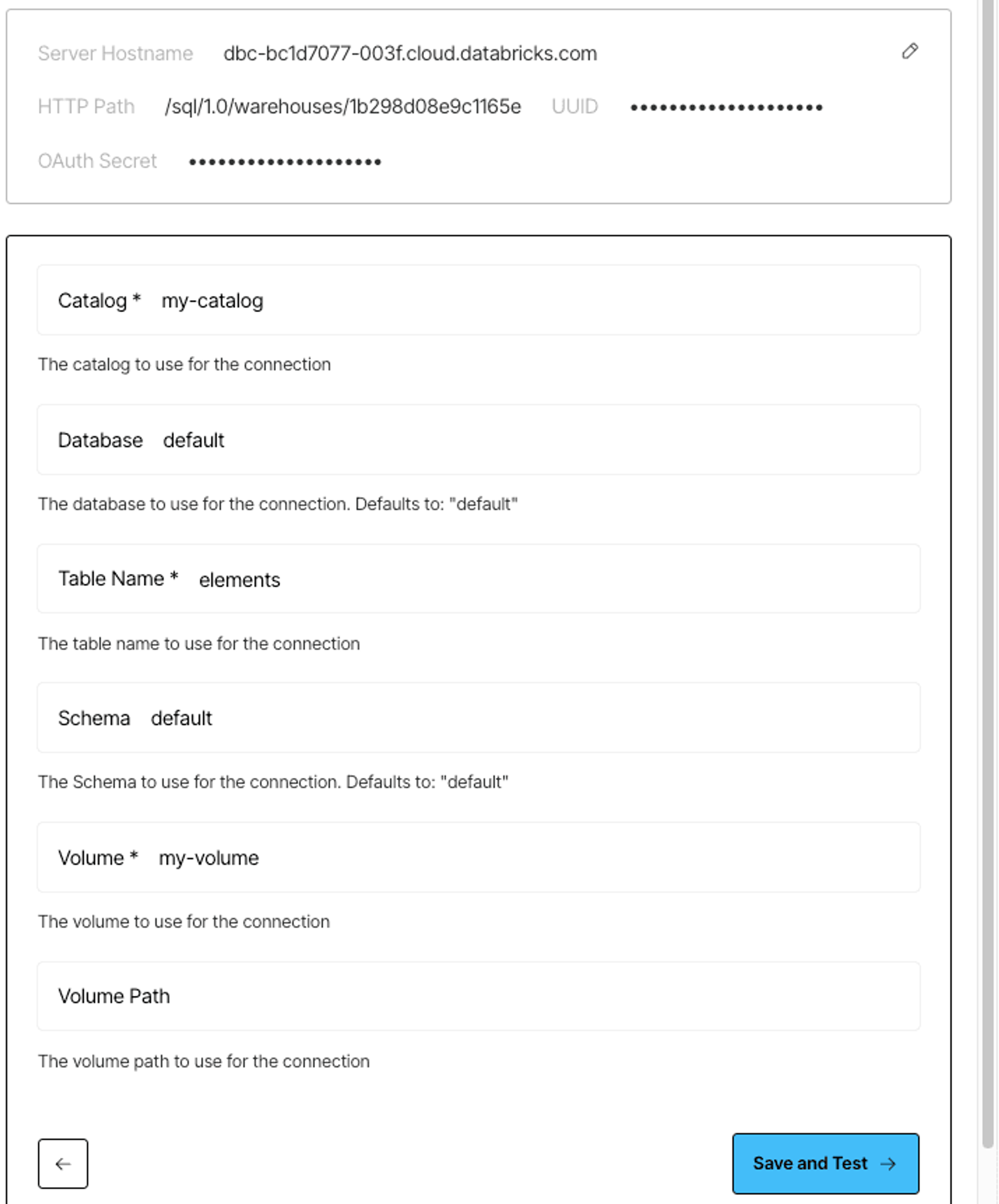

Here’s an example of the destination connector’s settings with a service principal selected as an authentication method:

Configuration via API

You can also choose to create the destination connector using the Platform API:
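As a rough illustration, the sketch below builds (but does not send) an HTTP request of the kind such an API call might use. Everything specific in it is an assumption to verify against the Platform API documentation: the `/destinations` path, the `unstructured-api-key` header, the `databricks_volume_delta_tables` type string, and every key inside `config`. The base URL and credentials are placeholders.

```python
import json
import urllib.request


def build_destination_request(api_base_url, api_key, name, config):
    """Build a POST request that would create a destination connector.

    The endpoint path, header name, and connector type string are
    assumptions; confirm them in the Platform API documentation.
    """
    payload = {
        "name": name,
        "type": "databricks_volume_delta_tables",  # assumed type identifier
        "config": config,
    }
    return urllib.request.Request(
        url=f"{api_base_url.rstrip('/')}/destinations",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "accept": "application/json",
            "content-type": "application/json",
            "unstructured-api-key": api_key,  # assumed header name
        },
        method="POST",
    )


# Assumed config keys, using service principal (OAuth) authentication.
config = {
    "server_hostname": "dbc-example.cloud.databricks.com",
    "http_path": "/sql/1.0/warehouses/abc123",
    "client_id": "my-client-id",
    "client_secret": "my-client-secret",
    "catalog": "main",
    "schema": "default",
    "volume": "rag_volume",
    "table_name": "elements",
}

request = build_destination_request(
    api_base_url="https://platform.example.invalid/api/v1",  # placeholder
    api_key="my-api-key",                                     # placeholder
    name="delta-tables-destination",
    config=config,
)
print(request.get_method(), request.full_url)

# To actually send it, once the values above are real:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Building the request separately from sending it makes it easy to inspect the payload, or log it with secrets redacted, before anything reaches the API.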

Get started!

If you're an Unstructured Platform user, the Delta Tables integration is already available in your dashboard!

Make sure to check out our recent blog post that provides an end-to-end RAG example using this destination connector.

Expert access

Need a tailored setup for your specific use case? Our engineering team is available to help optimize your implementation. Book a consultation session to discuss your requirements.