
Integrating Unstructured metadata with Pinecone Hybrid Search can significantly enhance Retrieval Augmented Generation (RAG) systems by improving the retrieval of relevant documents for Large Language Models (LLMs).

Metadata plays a crucial role in making language model applications and search processes more efficient. By describing a document's content, structure, and context, metadata enables precise filtering and categorization of document elements. Unstructured metadata tracks general document information, such as filename and file type, as well as more detailed element-level information, such as element type.

Pinecone Hybrid Search combines semantic and keyword search using sparse-dense vectors, producing more comprehensive and relevant results. Dense vectors power semantic search, finding passages whose meaning is similar to the query even when they share few words with it. Sparse vectors power keyword search: each nonzero entry records how important a specific word is, so exact term matches count. Combining the two helps make results both contextually relevant and precisely matched to the query's keywords. For more background, see Pinecone's documentation on Understanding Hybrid Search.
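To make the combination concrete: as we understand Pinecone's documentation, an index using the dotproduct metric scores a sparse-dense record as the dense dot product (semantic similarity) plus the sparse dot product (keyword overlap). The minimal sketch below computes that score in plain Python. The function names are our own, and sparse vectors use the `{"indices": [...], "values": [...]}` shape that Pinecone accepts.

```python
from typing import Dict, List, Union

# Sparse vectors use the {"indices": [...], "values": [...]} shape Pinecone accepts.
SparseVector = Dict[str, List[Union[int, float]]]


def dense_dot(a: List[float], b: List[float]) -> float:
    """Semantic part: dot product of two dense embeddings of equal length."""
    if len(a) != len(b):
        raise ValueError("dense vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def sparse_dot(a: SparseVector, b: SparseVector) -> float:
    """Keyword part: only indices present in both vectors (shared terms) contribute."""
    b_weights = dict(zip(b["indices"], b["values"]))
    return sum(v * b_weights.get(i, 0.0) for i, v in zip(a["indices"], a["values"]))


def hybrid_score(q_dense: List[float], q_sparse: SparseVector,
                 d_dense: List[float], d_sparse: SparseVector) -> float:
    """Score of a sparse-dense record for a sparse-dense query under dotproduct."""
    return dense_dot(q_dense, d_dense) + sparse_dot(q_sparse, d_sparse)


# Toy example: the dense parts are close, and the sparse parts share one term (index 42).
score = hybrid_score([0.6, 0.8], {"indices": [7, 42], "values": [1.0, 0.5]},
                     [0.8, 0.6], {"indices": [42, 99], "values": [2.0, 1.0]})
print(round(score, 2))
```

A record that shares no keywords with the query still gets its dense score, and a record with matching keywords but different wording still gets its sparse score, which is why the combination tends to be more robust than either signal alone.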

The synergy of these technologies provides several advantages:

- Precise Document Search: Unstructured metadata and Pinecone Hybrid Search can accurately match search queries with relevant documents.

- High-Efficiency Retrieval: Combining structured metadata with Pinecone's hybrid search enables quick access to relevant information in large data collections.

- Easy Metadata Filtering: Unstructured carries over existing file metadata from systems of record and generates new metadata (e.g., element type, hierarchy, xy coordinates, language, and more). This metadata can be used together with Hybrid Search to further refine retrieval.

Combining Unstructured's metadata extraction and generation with Pinecone Hybrid Search can significantly improve RAG systems: better search over diverse datasets gives them more accurate and relevant material to generate from. This blog post demonstrates how to extract and generate metadata with Unstructured and integrate it with Pinecone Hybrid Search for better data retrieval. You can follow along with the code in this Google Colab notebook.

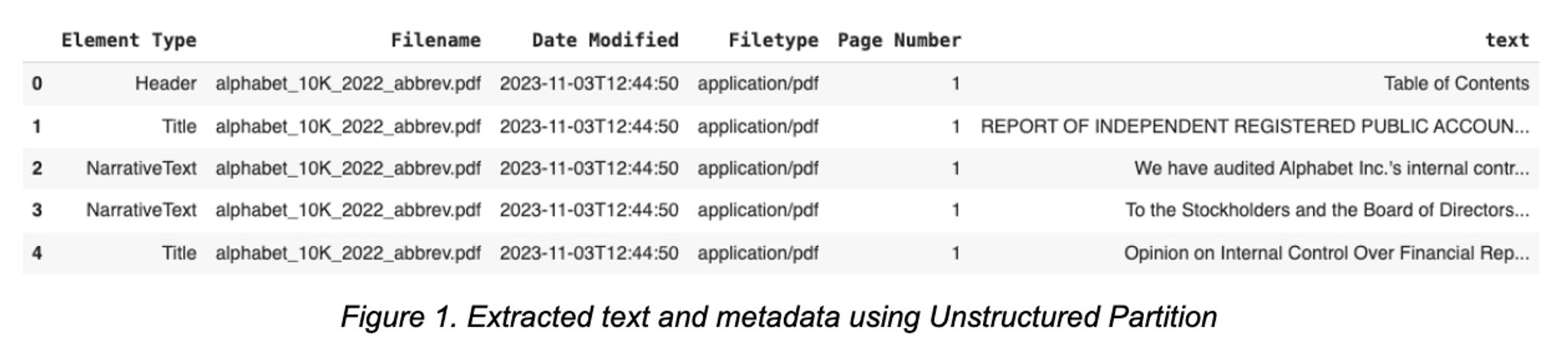

Extracting Text and Metadata from PDF Documents

The process begins by transforming the data in the PDF documents. Using Unstructured's partition_pdf function, we can render a PDF into clean JSON, broken down into individual document elements. partition_pdf identifies and separates the logical components of a document, such as text blocks, tables, and images. Rendering these elements precisely is crucial for building a detailed metadata repository, which in turn improves retrieval accuracy.
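A minimal sketch of this step, assuming the open-source `unstructured` package is installed with its PDF extras: `partition_pdf` splits the file into elements, and each element's `to_dict()` gives its JSON-ready form (`type`, `element_id`, `text`, `metadata`). The `strategy="hi_res"` and `infer_table_structure=True` settings are our assumptions for table-heavy PDFs, not necessarily what the notebook uses. The import is deferred into the function so the two small helpers run without `unstructured` installed.

```python
import json
from collections import Counter
from typing import Any, Dict, List


def partition_pdf_to_dicts(pdf_path: str) -> List[Dict[str, Any]]:
    """Partition a PDF with Unstructured and return its elements as JSON-ready dicts."""
    # Deferred import: the helpers below run without unstructured installed.
    from unstructured.partition.pdf import partition_pdf

    elements = partition_pdf(
        filename=pdf_path,
        strategy="hi_res",           # layout-model parsing (assumed; needs the PDF extras)
        infer_table_structure=True,  # keep table structure (as HTML) in element metadata
    )
    # Each element serializes to a dict with "type", "element_id", "text", "metadata".
    return [element.to_dict() for element in elements]


def to_json(element_dicts: List[Dict[str, Any]]) -> str:
    """Render the element dicts as a JSON string."""
    return json.dumps(element_dicts, indent=2, ensure_ascii=False)


def count_element_types(element_dicts: List[Dict[str, Any]]) -> Counter:
    """Count elements per type (Title, NarrativeText, Table, ...)."""
    return Counter(d["type"] for d in element_dicts)
```

For example, `count_element_types(partition_pdf_to_dicts("example.pdf"))` (with a hypothetical file path) gives a quick check of how many tables, titles, and text blocks were detected before anything is indexed.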

Metadata Selection for Storage and Retrieval in Pinecone

After Unstructured transforms the PDF into JSON with the relevant metadata, we convert this data into a pandas DataFrame. The DataFrame format is useful in a later step, when we bulk-upsert the data into the Pinecone index. It also provides a structured, intuitive way to organize and inspect the documents before they are stored in the Pinecone vector database.

Unstructured metadata also includes advanced fields such as “parent_id”, which represents the document hierarchy and can be useful for context-aware chunking in a RAG architecture. For this example, however, we select only a few fields to use for storage and retrieval: Element Type, Filename, Date Modified, Filetype, Page Number, and Text. Each serves a specific purpose:

- Element Type helps categorize the data for more efficient retrieval.

- Filename and Date Modified offer context and version control.

- Filetype and Page Number provide structural information.

- Text is the core content used for retrieval and LLMs.
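One way to express this field selection, assuming each element looks like Unstructured's element JSON (top-level `type` and `text`, everything else under `metadata`), is to pull the six fields into flat rows. The mapping of the article's field names onto Unstructured keys (for example, Date Modified to `metadata.last_modified`) and the helper names are our assumptions; fields an element lacks come back as `None`.

```python
from typing import Any, Dict, List, Optional, Tuple

# Assumed mapping from the selected fields to paths in Unstructured's element JSON.
FIELD_PATHS: Dict[str, Tuple[str, ...]] = {
    "type": ("type",),                               # Element Type
    "filename": ("metadata", "filename"),            # Filename
    "last_modified": ("metadata", "last_modified"),  # Date Modified
    "filetype": ("metadata", "filetype"),            # Filetype
    "page_number": ("metadata", "page_number"),      # Page Number
    "text": ("text",),                               # Text
}


def get_path(d: Dict[str, Any], path: Tuple[str, ...]) -> Optional[Any]:
    """Follow a key path through nested dicts; return None if any key is missing."""
    current: Any = d
    for key in path:
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def select_fields(element_dicts: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """One flat row per element, keeping only the fields chosen for storage and retrieval."""
    return [{col: get_path(el, path) for col, path in FIELD_PATHS.items()} for el in element_dicts]
```

With pandas installed, `pd.DataFrame(select_fields(element_dicts))` then yields the DataFrame described above, with one column per selected field. Fields not listed, such as `parent_id`, are simply dropped.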

Storing Documents by Element Metadata

Splitting document storage into sparse and dense data in Pinecone Hybrid Search is designed to optimize search and retrieval. This dual strategy stores and accesses text and metadata in the form best suited to each one's purpose, improving the overall effectiveness of the RAG architecture.

Storage of documents is divided into two categories:

- Sparse Data Storage: Here, we store metadata such as Filename, Element Type, and Page Number, encoded as sparse keyword vectors. This is akin to creating an index that helps quickly reference and retrieve documents.

- Dense Data Storage: This involves storing vectorized text. Vectorization transforms text into numerical embeddings, which are essential for semantic search. Dense data is what lets search go beyond exact keyword matching.
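One way to realize this split, assuming one flattened row per element, is to join the row's metadata values into a short keyword string for the sparse encoder and send the element text to the dense encoder. The hypothetical `split_row` helper below shows that split. A query that mentions, say, “Table” or a filename can then match on the sparse side even when it shares little wording with the element's text.

```python
from typing import Any, Dict, Tuple


def split_row(row: Dict[str, Any], text_key: str = "text") -> Tuple[str, str]:
    """Split one flattened element row into (sparse input, dense input).

    Sparse input: the non-empty metadata values joined into one keyword string,
    e.g. "Table report.pdf 3", for a keyword (BM25-style) encoder.
    Dense input: the element text, for an embedding model.
    """
    keywords = " ".join(
        str(value) for key, value in row.items() if key != text_key and value is not None
    )
    return keywords, row.get(text_key) or ""


sparse_input, dense_input = split_row(
    {"type": "Table", "filename": "report.pdf", "page_number": 3, "text": "Revenue grew 12%"}
)
```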

Initialize and Create the Pinecone Index

In this step, we initialize and create a Pinecone index to store the document data. First, you will need to generate a Pinecone API key. The process involves creating a new Pinecone project, defining the index name, and creating the index with a specific dimension and metric. In code, this means initializing a connection to Pinecone, checking for existing indexes, creating a new index if needed, and waiting until it is ready.

Note: On January 16, 2024, Pinecone introduced a new API with the release of pinecone-client version 3.0.0. For a comprehensive list of changes, see the Python client v3 migration guide.
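A sketch of the index setup against the v3 client, written so the client object is passed in: `pc` is assumed to be `pinecone.Pinecone(api_key=...)`, and `spec` a `PodSpec` or `ServerlessSpec` suited to your project. The helper name `ensure_hybrid_index` and the timeout are our additions. The metric is fixed to `dotproduct` because Pinecone supports sparse-dense vectors only on dotproduct indexes.

```python
import time
from typing import Any


def ensure_hybrid_index(pc: Any, name: str, dimension: int, spec: Any,
                        poll_seconds: float = 1.0, timeout: float = 300.0) -> Any:
    """Create a sparse-dense-capable index if it is missing, wait until ready, return a handle.

    `pc` is assumed to be a pinecone.Pinecone client (pinecone-client >= 3.0.0).
    """
    if name not in pc.list_indexes().names():
        # Sparse-dense (hybrid) vectors require the dotproduct metric.
        pc.create_index(name=name, dimension=dimension, metric="dotproduct", spec=spec)
    deadline = time.monotonic() + timeout
    # Poll until Pinecone reports the index as ready to accept upserts and queries.
    while not pc.describe_index(name).status["ready"]:
        if time.monotonic() > deadline:
            raise TimeoutError(f"index {name!r} not ready after {timeout} s")
        time.sleep(poll_seconds)
    return pc.Index(name)
```

For example, `ensure_hybrid_index(Pinecone(api_key="YOUR_API_KEY"), "unstructured-demo", 384, PodSpec(environment="gcp-starter"))`, where the index name and environment are placeholders and 384 assumes a MiniLM-sized embedding model such as all-MiniLM-L6-v2. The dimension must match whatever dense model you use.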

Define Sparse and Dense Vectors

Sparse vectors, created with the BM25Encoder from Pinecone's pinecone-text library, are particularly useful for keyword-based data. Dense vectors, generated with SentenceTransformer models, capture the semantic meaning of the text.
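To show what a BM25 sparse vector contains, here is a deliberately small BM25 encoder in plain Python. It is a toy swapped in for illustration, not pinecone-text's BM25Encoder: it assigns indices from a vocabulary instead of hashing tokens, and it does no stemming or stopword removal. Document vectors carry saturated, length-normalized term frequencies and query vectors carry inverse document frequencies, so their dot product is the BM25 score. In the actual pipeline, a fitted `BM25Encoder` (via its `encode_documents` and `encode_queries` methods) plays this role, and a SentenceTransformer model's `encode` method produces the dense vectors.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercased alphanumeric tokens; real encoders also stem and drop stopwords.
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyBM25:
    """Illustrative BM25 sparse encoder (a toy, not pinecone-text's BM25Encoder)."""

    def __init__(self, k1: float = 1.2, b: float = 0.75):
        self.k1, self.b = k1, b

    def fit(self, corpus: List[str]) -> "ToyBM25":
        docs = [tokenize(text) for text in corpus]
        self.n_docs = len(docs)
        self.avgdl = sum(len(d) for d in docs) / max(self.n_docs, 1) or 1.0
        # Document frequency: in how many documents each token appears.
        self.df = Counter(tok for d in docs for tok in set(d))
        # The vocabulary gives each known token a fixed sparse index.
        self.vocab = {tok: i for i, tok in enumerate(sorted(self.df))}
        return self

    def encode_document(self, text: str) -> Dict[str, list]:
        """Document side: saturated, length-normalized term frequency per token."""
        tokens = tokenize(text)
        norm = 1 - self.b + self.b * len(tokens) / self.avgdl
        indices, values = [], []
        for tok, tf in Counter(tokens).items():
            if tok in self.vocab:
                indices.append(self.vocab[tok])
                values.append(tf * (self.k1 + 1) / (tf + self.k1 * norm))
        return {"indices": indices, "values": values}

    def encode_query(self, text: str) -> Dict[str, list]:
        """Query side: inverse document frequency, so rare terms weigh more."""
        indices, values = [], []
        for tok in sorted(set(tokenize(text))):
            if tok in self.vocab:
                n = self.df[tok]
                indices.append(self.vocab[tok])
                values.append(math.log(1 + (self.n_docs - n + 0.5) / (n + 0.5)))
        return {"indices": indices, "values": values}


def sparse_dot(a: Dict[str, list], b: Dict[str, list]) -> float:
    """Dot product of two sparse vectors; for a query and a document this is the BM25 score."""
    weights = dict(zip(b["indices"], b["values"]))
    return sum(v * weights.get(i, 0.0) for i, v in zip(a["indices"], a["values"]))
```

Splitting the BM25 formula this way is what lets a vector database compute keyword relevance with the same dot product it uses for dense vectors.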

Upload the Documents to the Pinecone Index

Finally, we upload the documents to the Pinecone index. We split the documents into batches (a metadata batch and a text batch per step), encode them into sparse and dense vectors, and upsert them into the index. Upserting one batch at a time keeps the upload efficient and organized, and tqdm provides a progress bar.
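A sketch of the batched upload, under a few assumptions: the DataFrame has been converted to a list of row dicts (for example with `df.to_dict(orient="records")`), the sparse input is the row's metadata joined into one string, the dense input is its text, and records get positional string IDs. The encoders are passed in as callables so the function does not depend on a particular library; with the tools named above they would be `bm25.encode_documents` and `lambda texts: model.encode(texts).tolist()`. The helper name is hypothetical.

```python
from typing import Any, Callable, Dict, List, Sequence


def upsert_in_batches(
    index: Any,
    rows: Sequence[Dict[str, Any]],
    encode_sparse: Callable[[List[str]], List[Dict[str, list]]],
    encode_dense: Callable[[List[str]], List[List[float]]],
    batch_size: int = 32,
) -> int:
    """Encode rows batch by batch and upsert them as sparse-dense records.

    Returns the number of records upserted.
    """
    total = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # Pinecone metadata does not accept null values, so drop None fields.
        metadata = [{k: v for k, v in row.items() if v is not None} for row in batch]
        # Metadata batch (everything except the text) feeds the sparse encoder...
        meta_batch = [" ".join(str(v) for k, v in m.items() if k != "text") for m in metadata]
        # ...and the text batch feeds the dense encoder.
        text_batch = [row.get("text") or "" for row in batch]
        sparse = encode_sparse(meta_batch)
        dense = encode_dense(text_batch)
        records = [
            {"id": str(start + i), "values": d, "sparse_values": s, "metadata": m}
            for i, (s, d, m) in enumerate(zip(sparse, dense, metadata))
        ]
        index.upsert(vectors=records)
        total += len(records)
    return total
```

Wrapping the `range(...)` in `tqdm(...)` adds the progress bar mentioned above. Because IDs are positional, re-running the upload overwrites the same records rather than duplicating them. Keeping the text in metadata makes it available at query time, but it counts toward Pinecone's per-record metadata size limit.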

Retrieval and Filtering with Pinecone Hybrid Search

The ability to retrieve exactly what's needed — such as specific numerical data from tables — is arguably the most crucial step in building an accurate, reliable RAG system. It's not just about finding data; it's about finding the right data, quickly and accurately.

Beyond efficient data storage, Pinecone Hybrid Search supports advanced retrieval and filtering. For instance, users can restrict results to a given Element Type, such as Table, which is useful when a task requires data in a specific format.

Pinecone facilitates this selective retrieval by allowing users to attach metadata key-value pairs to vectors in an index. When querying, users can specify filter expressions based on this metadata. This enables precise and relevant search results, like filtering for only “Table” elements in a set of documents. For more details, refer to Pinecone's documentation on Filtering with Metadata.
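Pinecone's filter language uses MongoDB-style operators such as `$eq`, `$in`, `$gte`, `$lte`, and `$and`. The hypothetical helper below composes those operators from optional conditions. The metadata keys (`type`, `filename`, `page_number`) are assumed to match whatever was attached at upsert time.

```python
from typing import Any, Dict, List, Optional


def build_metadata_filter(
    element_types: Optional[List[str]] = None,
    filename: Optional[str] = None,
    min_page: Optional[int] = None,
    max_page: Optional[int] = None,
) -> Optional[Dict[str, Any]]:
    """Build a Pinecone metadata filter from optional conditions (None means no filter)."""
    clauses: List[Dict[str, Any]] = []
    if element_types:
        clauses.append({"type": {"$in": list(element_types)}})
    if filename is not None:
        clauses.append({"filename": {"$eq": filename}})
    page_range: Dict[str, int] = {}
    if min_page is not None:
        page_range["$gte"] = min_page
    if max_page is not None:
        page_range["$lte"] = max_page
    if page_range:
        clauses.append({"page_number": page_range})
    if not clauses:
        return None
    # A single condition is used directly; several are combined with $and.
    return clauses[0] if len(clauses) == 1 else {"$and": clauses}
```

The result is passed as the `filter` argument of the index's query call; returning `None` when no condition is given lets callers omit the filter entirely.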

Basic Retrieval

Basic retrieval queries the Pinecone index with a combination of sparse and dense vectors. The query is first encoded into sparse and dense form, and both vectors are passed to the index's query function, which returns the top results that best match the query in both semantic relevance and keyword overlap.
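A sketch of the basic hybrid query against the v3 client's `index.query(vector=..., sparse_vector=..., top_k=..., include_metadata=True)`. It adds one thing the text does not mention: a convex weighting parameter `alpha`, following the pattern in Pinecone's hybrid search documentation, which scales the dense query by `alpha` and the sparse query by `1 - alpha`. The helper names and the default `alpha=0.5` are assumptions; `encode_dense` and `encode_sparse_query` would typically be `lambda q: model.encode(q).tolist()` and `bm25.encode_queries`.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple


def hybrid_scale(dense: List[float], sparse: Dict[str, list],
                 alpha: float) -> Tuple[List[float], Dict[str, list]]:
    """Convex weighting: alpha=1 is pure semantic (dense), alpha=0 is pure keyword (sparse)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    scaled_sparse = {"indices": list(sparse["indices"]),
                     "values": [v * (1 - alpha) for v in sparse["values"]]}
    return [v * alpha for v in dense], scaled_sparse


def hybrid_query(index: Any, query: str,
                 encode_dense: Callable[[str], List[float]],
                 encode_sparse_query: Callable[[str], Dict[str, list]],
                 top_k: int = 5, alpha: float = 0.5,
                 metadata_filter: Optional[Dict[str, Any]] = None) -> Any:
    """Encode the query both ways, weight the parts, and run one hybrid query."""
    dense, sparse = hybrid_scale(encode_dense(query), encode_sparse_query(query), alpha)
    kwargs: Dict[str, Any] = dict(vector=dense, sparse_vector=sparse,
                                  top_k=top_k, include_metadata=True)
    if metadata_filter is not None:
        kwargs["filter"] = metadata_filter
    return index.query(**kwargs)
```

Because both parts are scaled by the same factor at `alpha=0.5`, that setting ranks results exactly as the unweighted sum would. Moving `alpha` toward 1 favors semantic matches, and moving it toward 0 favors keyword matches.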

Filtering Retrieval for Tables Only

Finally, we demonstrate how to use metadata filtering to search exclusively for “Table” elements. This is particularly useful in applications such as academic research or business analytics, where tables are essential for data analysis. Restricting searches to “Table” elements lets users efficiently extract data such as research statistics or financial figures from many documents.

The process mirrors basic retrieval but adds a metadata filter, which confines the results to elements that match the query and also belong to the “Table” category.
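Here is a sketch of the table-only query, assuming the element type was stored under the metadata key `type` and that the query has already been encoded into dense and sparse vectors. The filter `{"type": {"$eq": "Table"}}` is the only difference from basic retrieval. It also assumes the response supports dictionary-style access to `matches`, as in Pinecone's examples. The helper name and the shape of the returned tuples are our own choices.

```python
from typing import Any, Dict, List, Tuple

# Assumes the element type was stored under the metadata key "type".
TABLE_FILTER = {"type": {"$eq": "Table"}}


def query_tables(index: Any, dense: List[float], sparse: Dict[str, list],
                 top_k: int = 5) -> List[Tuple[float, Any, Any, str]]:
    """Hybrid query restricted to Table elements.

    Returns (score, filename, page_number, text) tuples, best match first.
    """
    res = index.query(
        vector=dense,
        sparse_vector=sparse,
        top_k=top_k,
        include_metadata=True,
        filter=TABLE_FILTER,  # only records whose metadata type is "Table"
    )
    hits = []
    for match in res["matches"]:
        meta = match["metadata"]
        hits.append((match["score"], meta.get("filename"), meta.get("page_number"),
                     meta.get("text", "")))
    return hits
```

Returning the filename and page number alongside each table's text makes it easy to cite where a retrieved figure came from when the results are passed to an LLM.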

Conclusion

Combining Unstructured text and metadata extraction with Pinecone Hybrid Search in RAG systems offers a unique data storage and retrieval approach. This combination enhances the accuracy and relevance of the information retrieved and streamlines the process, making it more efficient and user-friendly. As we continue to delve deeper into the era of big data, such innovations will play a critical role in harnessing the full potential of unstructured datasets.

Providing the rich metadata extracted by Unstructured to Pinecone’s Hybrid Search unlocks even more accurate and efficient retrieval, a key foundation for building robust RAG systems. This metadata includes, to name a few, granular element type, document name, source URL, page number, languages used, date created, date last modified, and hierarchy. Feeding this rich data to Hybrid Search narrows the response to a user’s question down to precisely the relevant content.

To unlock this kind of search across all their documents, users need an ingestion and preprocessing workflow that pulls documents in many formats from many locations and feeds them through. In addition to metadata generation, that workflow needs element extraction and chunking. Fortunately, Unstructured has all of this covered. It supports 25 different file types and 30+ source/destination connectors, and its smart chunking capabilities make sure the entries in your Pinecone database are always cohesive, logically complete units (with no critical content missing).

About Pinecone

Pinecone has built the first vector database to enable the next generation of artificial intelligence (AI) applications in the cloud. Its engineers built ML platforms at AWS, Databricks, Yahoo, Google, and Splunk, and its scientists have published more than 100 academic papers and patents on machine learning, data science, systems, and algorithms. Pinecone operates in Silicon Valley, New York, and Tel Aviv. For more information, see https://www.pinecone.io.

About Unstructured

Unstructured is the leading provider of LLM data preprocessing solutions, empowering organizations to transform their internal unstructured data into formats compatible with large language models. By automating the transformation of complex natural language data found in formats like PDFs, PPTX, HTML files, and more, Unstructured enables enterprises to leverage the full power of their data for increased productivity and innovation. With key partnerships and a growing customer base of over 35,000 organizations, Unstructured is driving the adoption of enterprise LLMs worldwide. To learn more, connect with us directly in our community Slack.