Authors

In this tutorial, we will focus on using the Unstructured tools to ingest and preprocess the dataset for LLM consumption and chunking strategy by document elements. The codes for this blog post are available on Google Colab.

Introduction

Web scraping and text chunking are foundational techniques for gathering and preparing clean data from the internet, especially when working with Large Language Models (LLMs). This blog post will explore an easy-to-use solution using the Unstructured library for both tasks. We’ll explore how to scrape content from websites effectively and chunk it into manageable pieces, ensuring you maintain the essential context for your LLM.

Ingesting and Preprocessing Data from Websites with Unstructured

The dataset for this webinar is derived from the Arize AI documentation from its websites. Ingesting text content from HTML documents can often be a complex task involving parsing HTML, identifying relevant sections, and extracting the text. This process can get particularly complicated when the webpage has an elaborate layout or is filled with ads and other distractions.

The Unstructured library offers an elegant solution: the partition_html function. This function partitions an HTML document into document element objects, simplifying the ingestion process with just one line of code. Here’s how you can use it:

from unstructured import partition_html# Using partition_html to ingest HTML contentdocument_elements = partition_html(url=input_html)

This function accepts inputs from HTML files, text strings, or URLs and provides a unified interface to process HTML documents and extract meaningful elements.

This function is highly configurable with optional arguments. For example, you can:

- determine whether to use SSL verification in the HTTP request,

- include or ignore content within <header> or <footer> HTML tags, and

- choose the encoding method for the text input.

After scraping the data, you can store it in a structured format for further downstream tasks, such as storing it in a vector database or fine-tuning LLMs. JSON is often the go-to choice for this.

The partition_html function will return a list of Element objects representing the elements of the HTML document in JSON that can be malleable into any schema. In our notebook example, the scraped data is stored with the following schema:

{ 'metadata': { 'source': <url>, 'title': <page title> }, 'page_content': <text>}

The metadata contains information about the data source, including the source URL and page title. The page_content holds the main textual content extracted from the page.

Chunking Long Website Content

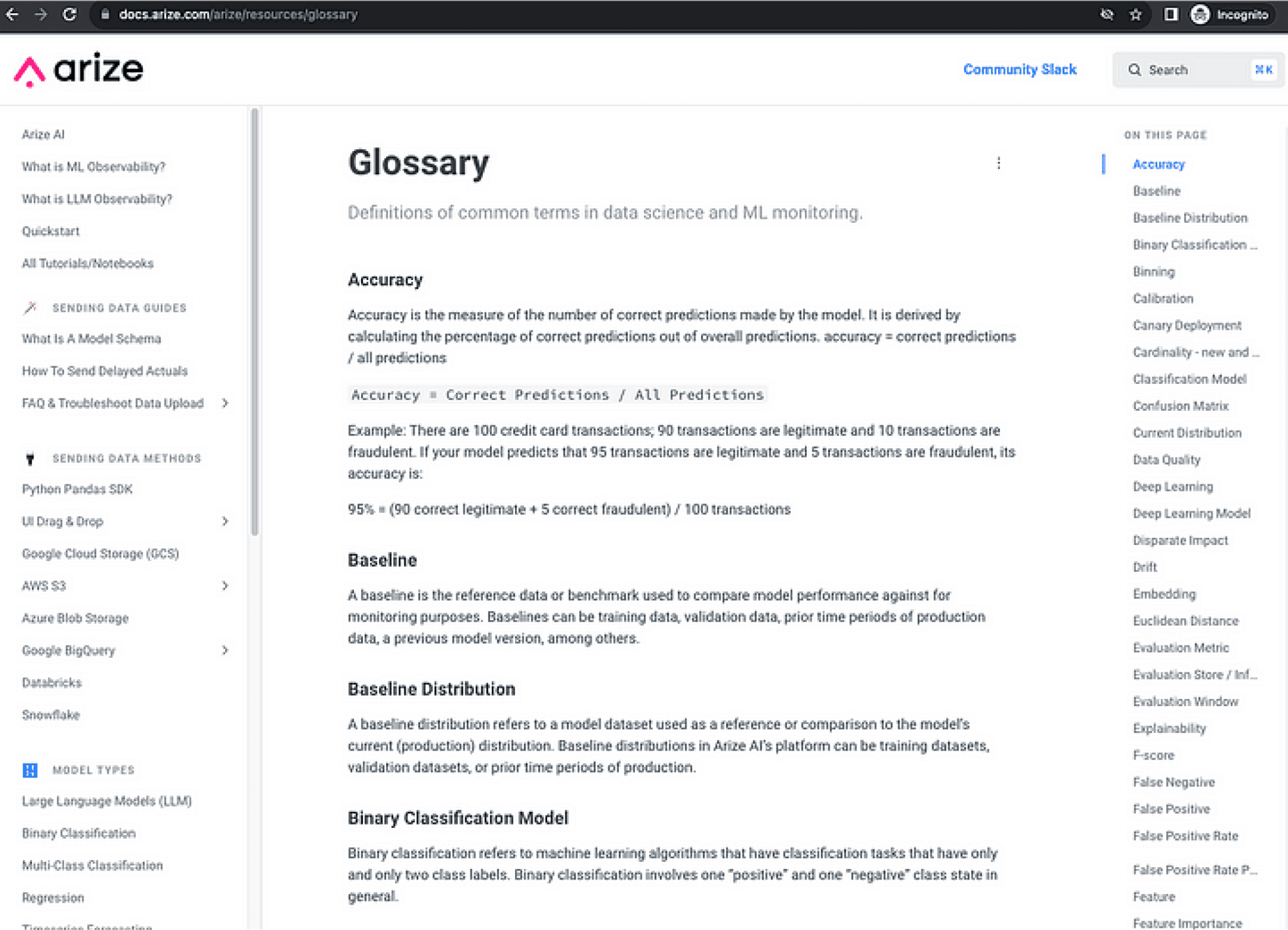

When dealing with extensive webpages like the Arize AI Glossary page, the textual content can often be too lengthy for a Large Language Model to process in a single pass. This necessitates breaking the content into manageable chunks while maintaining the essential context.

Fig 1. Arize AI Glossary page

In general, we can use two chunking strategies:

- Fixed-Size Chunking: While simple to implement, it can lose relevant context, mainly when vital information is split between chunks.

- Context-Aware Chunking: If we inspect the glossary page, we will see the terminology is embedded in the HTML “heading” tag and the description in the “span” tag. The partition_html can automatically detect the document elements.

The `Unstructured` library offers a more nuanced approach: context-aware chunking from the extracted metadata. Using the `partition_html` function, we can maintain the hierarchical relationship between different types of HTML elements.

We can group the HTML elements in a hierarchy. By utilizing the element type identified by `partition_html,` context-aware chunking maintains the logical structure and coherence of the HTML content.

Here’s how it works:

- Identify Element Types: The function classifies elements as `HTMLTitle` for subheaders or `HTMLNarrativeText` for regular texts.

- Group by Context: Elements are grouped iteratively, ensuring that titles are followed by their related narrative texts.

Implementation:

source = 'https://docs.arize.com/arize/resources/glossary'title = 'Glossary'all_groups = []group = {'metadata': {'source': source, 'title': title}, 'page_content': ''}# ingest and preprocess webpage into Unstructured elements objectglossary_page = partition_html(url=source)# iterate the document elements and group texts by titlefor element in glossary_page: if 'unstructured.documents.html.HTMLTitle' in str(type(element)): # If there's already content in the group, add it to all_groups if group['page_content']: all_groups.append(group) group = {'metadata': {'source': source, 'title': title}, 'page_content': ''} group['page_content'] += element.text elif 'unstructured.documents.html.HTMLNarrativeText' in str(type(element)): group['page_content'] += '. ' + element.text# Add the last group if it existsif group['page_content']: all_groups.append(group)# Print the groupsfor group in all_groups[:3]: print(group)

In the Unstructured v0.10.9 release, we implemented a new functionality: chunk_by_title. This new functionality will simplify your code to group the elements hierarchically. The `chunk_by_title` function combines elements into sections by looking for the titles’ presence. When a title is detected, a new section is created. Tables and non-text elements (such as page breaks or images) are always their sections.

Conclusion

Web scraping and text chunking are essential steps in data preparation for Large Language Models. The `Unstructured` library offers a straightforward yet powerful way to handle these tasks effectively. Using the `partition_html` function, you can quickly ingest and chunk website content while maintaining its essential context, making your data more valuable and usable for your LLMs. With these techniques in your toolkit, you’re well on your way to becoming a master of data ingestion and preparation for Large Language Models.

We would like to hear your feedback. Join Unstructured Community Slack here.